Transcription of C o v e r f e a t u r e Amdahl’s Law in the Multicore Era

1 Amdahl s Law in the Multicore EraAs we enter the Multicore era, we re at an inflection point in the computing landscape. Computing vendors have announced chips with multiple processor cores. Moreover, vendor road maps promise to repeatedly double the number of cores per chip. These future chips are variously called chip multiprocessors, Multicore chips, and many-core must subdue more degrees of freedom for Multicore chips than for single-core designs. They must address such questions as: How many cores? Should cores use simple pipelines or powerful multi-issue pipeline designs? Should cores use the same or different micro-architectures? In addition, designers must concurrently manage power from both dynamic and static answering these questions for today s multi-core chip with two to eight cores is challenging now, it will become much more challenging in the future.

2 Sources as varied as Intel and the University of California, Berkeley, predict a hundred,1 if not a thousand,2 the Amdahl s Law sidebar describes, this model has important consequences for the Multicore era. To complement Amdahl s software model, we offer a corol-lary of a simple model of Multicore hardware resources. Our results should encourage Multicore designers to view the entire chip s performance rather than focusing on core efficiencies. We also discuss several important limitations of our models to stimulate discussion and future COrOllAry fOr Multicore Chip COStTo apply Amdahl s law to a Multicore chip, we need a cost model for the number and performance of cores that the chip can support. We first assume that a Multicore chip of given size and technology generation can contain at most n base core equivalents, where a single BCE implements the baseline core.

3 This limit comes from the resources a chip designer is willing to devote to processor cores (with L1 caches). It doesn t include chip resources expended on shared caches, interconnection networks, memory controllers, and so on. Rather, we simplistically assume that these nonprocessor resources are roughly constant in the mul-ticore variations we are agnostic on what limits a chip to n BCEs. It might be power, area, or some combination of power, area, and other , we assume that (micro-) architects have techniques for using the resources of multiple BCEs to create a core with greater sequential performance. Let the performance of a single-BCE core be 1. We assume that architects can expend the resources of r BCEs to create a powerful core with sequential per-formance perf(r).

4 Architects should always increase core resources when perf(r) > r because doing so speeds up both sequential and parallel execution. When perf(r) < r, however, the trade-off begins. Increasing core performance aids sequential execution, but hurts parallel Amdahl s law with a corollary for Multicore hardware makes it relevant to future generations of chips with multiple processor cores. Obtaining optimal Multicore performance will require further research in both extracting more parallelism and making sequential cores D. Hill, University of Wisconsin-MadisonMichael R. Marty, Google0018-9162/08/$ 2008 IEEE Published by the IEEE Computer Society July 2008 33C o v e r f e a t u r eAuthorized licensed use limited to: Auburn University. Downloaded on January 12, 2009 at 17:26 from IEEE Xplore.

5 Restrictions apply. 34 ComputerOur equations allow perf(r) to be an arbitrary func-tion, but all our graphs follow Shekhar Borkar3 and assume perf(r) = r. In other words, we assume efforts that devote r BCE resources will result in sequential performance r. Thus, architectures can double per-formance at a cost of four BCEs, triple it for nine BCEs, and so on. We tried other similar functions (for example, r1 5.), but found no important changes to our Multicore ChipSA symmetric Multicore chip requires that all its cores have the same cost. A symmetric Multicore chip with a resource budget of n = 16 BCEs, for example, can sup-port 16 cores of one BCE each, four cores of four BCEs each, or, in general, n/r cores of r BCEs each (our equa-tions and graphs use a continuous approximation instead of rounding down to an integer number of cores).

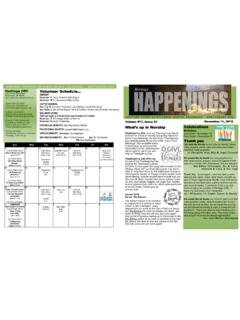

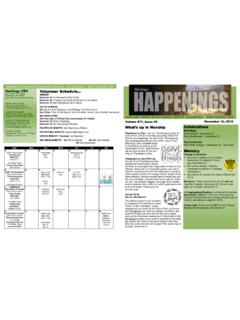

6 Figures 1a and 1b show two hypothetical symmetric Multicore chips for n = 16. Under Amdahl s law, the speedup of a symmetric Multicore chip (relative to using one single-BCE core) depends on the software fraction that is parallelizable (f), the total chip resources in BCEs (n), and the BCE resources (r) devoted to increase each core s perfor-mance. The chip uses one core to execute sequentially at performance perf(r). It uses all n/r cores to exe-cute in parallel at performance perf(r) n/r. Overall, we get:Speedupsymmetricf nrfperfrf r, ,( )=+ ( ) 11pperfr n( ) Consider Figure 2a. It assumes a symmetric multi-core chip of n = 16 BCEs and perf(r) = r. The x-axis Amdahl s lawEver yone knows Amdahl s law, but quickly for-gets it. Thomas Puzak, IBM, 2007 Most computer scientists learn Amdahl s law in school: Let speedup be the original execution time divided by an enhanced execution The modern version of Amdahl s law states that if you enhance a fraction f of a computation by a speedup S, the overall speedup is:Speedupenhancedf SffS,( )= ( )+11 Amdahl s law applies broadly and has important corollaries such as:Attack the common case: When f is small, optimi-zations will have little effect.

7 The aspects you ignore also limit speedup: As S approaches infinity, speedup is bound by 1/(1 f).Four decades ago, Gene Amdahl defined his law for the special case of using n processors (cores) in parallel when he argued for the single-processor approach s validity for achieving large-scale computing He used a limit argument to assume that a fraction f of a program s execution time was infinitely parallelizable with no scheduling overhead, while the remaining fraction, 1 f, was totally sequential. Without presenting an equation, he noted that the speedup on n processors is governed by:Speedupparallelf nffn,( )= ( )+11 Finally, Amdahl argued that typical values of 1 f were large enough to favor single their simplicity, Amdahl s arguments held, and mainframes with one or a few proces-sors dominated the computing landscape.

8 They also largely held in the minicomputer and personal computer eras that followed. As recent technology trends usher us into the Multicore era, Amdahl s law is still s equations assume, however, that the computation problem size doesn t change when running on enhanced machines. That is, the frac-tion of a program that is parallelizable remains fixed. John Gustafson argued that Amdahl s law doesn t do justice to massively parallel machines because they allow computations previously intrac-table in the given time A machine with greater parallel computation ability lets com-putations operate on larger data sets in the same amount of time. When Gustafson s arguments apply, parallelism will be ample. In our view, how-ever, robust general-purpose Multicore designs should also operate well under Amdahl s more pessimistic 1.

9 Amdahl, Validity of the Single-Processor Approach to Achieving Large-Scale Computing Capabilities, Proc. Am. Federation of Information Processing Societies Conf., AFIPS Press, 1967, pp. 483-485. 2. Gustafson, Reevaluating Amdahl s Law, Comm. ACM, May 1988, pp. licensed use limited to: Auburn University. Downloaded on January 12, 2009 at 17:26 from IEEE Xplore. Restrictions apply. July 2008 35(a)(b)(c)Figure 1. Varieties of Multicore chips. (a) Symmetric Multicore with 16 one-base core equivalent cores, (b) symmetric Multicore with four four-BCE cores, and (c) asymmetric Multicore with one four-BCE core and 12 one-BCE cores. These figures omit important structures such as memory interfaces, shared caches, and interconnects, and assume that area, not power, is a chip s limiting resource.

10 (a)(b)(c)(d)(e)(f)204816r BCEsSymmetric, n = 16204816326412825650100150200250r BCEsSpeedupsymmetric50100150200250 Speedupsymmetric50100150200250 SpeedupdynamicSpeedupdynamicSymmetric, n = 25624816r BCEsAsymmetric, n = 162048163264128256r BCEsAsymmetric, n = 2562004816246810121416 Speedupsymmetric246810121416 Speedupsymmetric246810121416r BCEsDynamic, n = 162048163264128256r BCEsDynamic, n = 256f = = = = = 2. Speedup of (a, b) symmetric, (c, d) asymmetric, and (e, f) dynamic Multicore chips with n = 16 BCEs (a, c, and e) or n = 256 BCEs (b, d, and f).Authorized licensed use limited to: Auburn University. Downloaded on January 12, 2009 at 17:26 from IEEE Xplore. Restrictions apply. 36 Computergives resources used to increase each core s perfor-mance: a value 1 says the chip has 16 base cores, while a value of r = 16 uses all resources for a single core.