Transcription of Data Storage Infrastructure at Facebook - …

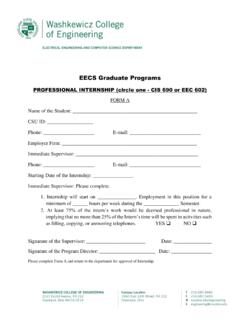

Data Storage Infrastructure at Facebook. Spring 2018, Cleveland State University, CIS 601 Presentation. Yi Dong. Instructor: Dr. Chung.

Outline
- Strategy for data storage, processing, and log collection
- Data flow from the source to the data warehouse
- Storage systems and optimization
- Data discovery and analysis
- Challenges in resource sharing

Facebook's architecture: PHP, HipHop compiler, Scribe, Thrift, Hadoop, HBase, Haystack, Hive, MySQL, Memcached.

Part 1: Strategy for Data Storage, Processing, and Log Collection
- Apache Hadoop
- Apache Hive
- Scribe

Why Hadoop?
- Scalability: able to process multi-petabyte datasets
- Fault tolerance: node failures are expected every day, and the number of nodes is not constant
- High availability: users can access data from the nearest node
- Cost efficiency: open source, runs on commodity hardware as nodes in Hadoop clusters, and avoids dependence on any particular technology

Hadoop architecture:
- HDFS (Hadoop Distributed File System)
- MapReduce infrastructure
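
The MapReduce model listed above splits a job into a map phase that emits key/value pairs, a shuffle that groups those pairs by key, and a reduce phase that combines each group. The sketch below simulates that model in a single Python process, assuming small in-memory inputs; it is not Hadoop's Java API, and `map_reduce` and its arguments are hypothetical names.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, Hashable, Iterable, List, Tuple


def map_reduce(
    records: Iterable[Any],
    mapper: Callable[[Any], Iterable[Tuple[Hashable, Any]]],
    reducer: Callable[[Hashable, List[Any]], Any],
) -> Dict[Hashable, Any]:
    """Single-process simulation of map -> shuffle -> reduce."""
    groups: Dict[Hashable, List[Any]] = defaultdict(list)
    for record in records:
        # Map phase: each record may emit zero or more (key, value) pairs.
        for key, value in mapper(record):
            # Shuffle: collect every value that shares a key.
            groups[key].append(value)
    # Reduce phase: combine each key's values into a single result.
    return {key: reducer(key, values) for key, values in groups.items()}


# Example: count page views per user from (user, page) log records.
logs = [("alice", "/home"), ("bob", "/home"), ("alice", "/photos")]
views = map_reduce(
    logs,
    mapper=lambda rec: [(rec[0], 1)],
    reducer=lambda key, values: sum(values),
)
print(views)  # {'alice': 2, 'bob': 1}
```

In Hadoop the same three phases run across many nodes, with HDFS supplying the input splits and storing the output.
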

Hive: SQL-like analysis tool (HiveQL)
- Runs on top of Hadoop
- Dramatically improves the productivity and usage of Hadoop
- With Hive, users without programming experience can use Hadoop for their work
- Without Hive, a basic data manipulation such as GROUP BY takes more than 100 lines of Java/Python code
- Worse, a programmer without database knowledge is likely to choose a sub-optimal algorithm, often a badly sub-optimal one

Hive Architecture

Scribe: Scalable Logging System
- Distributed and scalable logging system
- Combined with HDFS
- Aggregates logs from thousands of web servers

Part 2: Data Flow Architecture

Two sources of data:
- Web server log data, copied every 5-15 minutes
- Federated MySQL information data, copied daily

Two different clusters:
- Production Hive-Hadoop cluster
- Ad hoc Hive-Hadoop cluster

Dealing with Data Delivery Latency
- Even though log data is copied at 5-15 minute intervals, the loader only loads it into Hive native tables at the end of the day
- Solution at Facebook: use Hive's external table feature to create table metadata on the raw HDFS files
- After the data is loaded into the Hive native table at the end of the day, the raw HDFS files are removed from the external table
- New solutions are needed to enable continuous loading of log data
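
Scribe aggregates log lines from thousands of web servers, and log data is copied every 5-15 minutes. The sketch below shows only that batching idea: it groups messages from many servers into one batch per category and fixed time window, so each batch could be written as a single file. The `LogMessage` type, its `category` field, and the 15-minute default window are illustrative assumptions, not Scribe's actual interface.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, List, Tuple


@dataclass(frozen=True)
class LogMessage:
    server: str     # web server that emitted the line
    category: str   # hypothetical log category, e.g. "clicks"
    timestamp: int  # seconds since the epoch
    text: str


def bucket_messages(
    messages: Iterable[LogMessage], interval_seconds: int = 15 * 60
) -> Dict[Tuple[str, int], List[str]]:
    """Group messages from all servers into one batch per (category, window start)."""
    batches: Dict[Tuple[str, int], List[str]] = defaultdict(list)
    for msg in messages:
        # Round the timestamp down to the start of its fixed-length window.
        window_start = msg.timestamp - msg.timestamp % interval_seconds
        batches[(msg.category, window_start)].append(msg.text)
    return dict(batches)


msgs = [
    LogMessage("web1", "clicks", 0, "a"),
    LogMessage("web2", "clicks", 899, "b"),
    LogMessage("web1", "clicks", 900, "c"),
    LogMessage("web3", "errors", 10, "d"),
]
batches = bucket_messages(msgs)
# {('clicks', 0): ['a', 'b'], ('clicks', 900): ['c'], ('errors', 0): ['d']}
```

A shorter window lowers delivery latency but produces more, smaller files, which is one source of the NameNode memory pressure discussed under storage optimization.
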

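The external-table workaround for delivery latency can be modeled in a few lines. In the hypothetical `LogWarehouse` class below, raw files copied every few minutes become visible immediately through an "external" view; the end-of-day load moves them into the "native" table and removes them from the external one, so each row is read exactly once. Real Hive external tables are declared in HiveQL over HDFS directories; this Python model only illustrates the bookkeeping.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class LogWarehouse:
    # Hypothetical model: date -> rows already loaded into the native table.
    native: Dict[str, List[str]] = field(default_factory=dict)
    # Date -> raw files (each a list of rows) exposed through the external table.
    external: Dict[str, List[List[str]]] = field(default_factory=dict)

    def land_raw_file(self, day: str, rows: List[str]) -> None:
        """A periodic copy lands a raw file; it is queryable right away."""
        self.external.setdefault(day, []).append(rows)

    def end_of_day_load(self, day: str) -> None:
        """Load the day's raw files into the native table, then drop them
        from the external table so no row is counted twice."""
        files = self.external.pop(day, [])
        self.native.setdefault(day, []).extend(row for f in files for row in f)

    def query(self, day: str) -> List[str]:
        """Readers see loaded rows plus raw rows not yet loaded."""
        raw = [row for f in self.external.get(day, []) for row in f]
        return self.native.get(day, []) + raw


wh = LogWarehouse()
wh.land_raw_file("2018-03-01", ["a", "b"])
wh.land_raw_file("2018-03-01", ["c"])
before = wh.query("2018-03-01")  # read through the external table
wh.end_of_day_load("2018-03-01")
after = wh.query("2018-03-01")   # now served from the native table
```

Both queries return the same rows; only where they are read from changes, which is the point of the workaround.
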
Part 3: Storage Optimization
- All data needs to be compressed to save space
- Hadoop allows user-specified codecs; Facebook uses the gzip codec and gets a compression factor of 6-7
- By default, HDFS keeps 3 copies of data to prevent data loss
- Using erasure codes (2 copies of the data plus 2 copies of the error correction code), this multiple can be brought down to …
- Hadoop RAID is used on older data sets, while newer data sets stay replicated 3 ways

Part 3: Storage Optimization (continued)
- Reduce the memory usage of the HDFS NameNode, trading off latency to reduce memory pressure
- Implement a file format that reduces the number of map tasks
- Data federation:
  - Distribute data based on time: queries over data that spans a time boundary need more joins
  - Distribute data based on application: some common data has to be replicated
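
Compression and redundancy compound: data is compressed first and then stored with some redundancy multiple. The sketch below does that arithmetic. The 6x factor is the low end of the gzip figure above and 3 is the HDFS default replication; the erasure-coding layout (one parity block per stripe, stored twice) and the stripe length of 4 are assumptions chosen only to make the arithmetic concrete, not Facebook's Hadoop RAID parameters.

```python
PB = 10**15  # bytes in a (decimal) petabyte


def physical_bytes(logical_bytes: float, compression_factor: float,
                   storage_multiple: float) -> float:
    """Bytes on disk: compress first, then multiply by the redundancy overhead."""
    return logical_bytes / compression_factor * storage_multiple


def erasure_coded_multiple(data_copies: int, parity_copies: int,
                           stripe_length: int) -> float:
    """Storage multiple when `data_copies` full copies of the data are kept and
    each stripe of `stripe_length` data blocks gets one parity block, itself
    stored `parity_copies` times (an assumed layout)."""
    return data_copies + parity_copies / stripe_length


logical = 6 * PB  # hypothetical: 6 PB of uncompressed data
replicated = physical_bytes(logical, 6, 3)  # gzip ~6x, 3-way replication
raided = physical_bytes(logical, 6, erasure_coded_multiple(2, 2, 4))  # assumed stripe of 4
print(replicated / PB, raided / PB)  # 3.0 2.5
```

Longer stripes shrink the parity overhead toward the 2 data copies, at the cost of reading more blocks to reconstruct a lost one.
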

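The first data federation option, distributing data by time, amounts to routing each day's partitions to a cluster by date. In the sketch below the boundary dates and integer cluster ids are hypothetical. A query whose date range crosses a boundary must read from more than one cluster, which is why data across a time boundary needs more joins.

```python
import bisect
from datetime import date
from typing import List, Set

# Hypothetical boundaries: cluster 0 holds days before 2017-01-01,
# cluster 1 holds [2017-01-01, 2018-01-01), cluster 2 holds later days.
BOUNDARIES: List[date] = [date(2017, 1, 1), date(2018, 1, 1)]


def cluster_for(day: date) -> int:
    """Index of the cluster that stores this day's partitions."""
    return bisect.bisect_right(BOUNDARIES, day)


def clusters_for_range(start: date, end: date) -> Set[int]:
    """All clusters a query over the inclusive range [start, end] must read.
    Routing is monotonic in time, so every cluster between the two ends is touched."""
    return set(range(cluster_for(start), cluster_for(end) + 1))


# A late-December to early-January query spans two clusters.
spanning = clusters_for_range(date(2017, 12, 30), date(2018, 1, 2))
```

Distributing by application instead avoids this split for time-range queries, but, as noted above, data shared by several applications then has to be replicated.
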
Part 4: Data Discovery and Analysis
- Hive provides immense scalability to non-engineering users, such as business analysts and product managers
- Data discovery:
  - An internal tool enables a wiki-style approach to metadata creation
  - Tools extract lineage information from the query log
- Periodic batch jobs: for such jobs, dependencies between jobs and the ability to schedule them are critical

Part 5: Resource Sharing
- Support the coexistence of interactive jobs and batch jobs on the same Hadoop cluster
- Implement the Hadoop Fair Share Scheduler
- Isolate ad hoc queries from periodic batch queries
- Make the scheduler more aware of the system resource usage caused by poorly written ad hoc queries

Take-Home Message
For a data warehouse design, consider:
- What kinds of data sources, and what flow architecture?
- What kind of storage architecture?
- What kinds of users, and what kinds of tasks?
- How can usage be made easier?
- How should resources be shared between jobs?

End. Thank you.
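
The fair-share scheduling in Part 5 rests on max-min fairness: each pool (for example, ad hoc queries versus periodic batch jobs) is offered an equal share of the slots, and capacity a pool cannot use is redistributed to the others. The sketch below computes such an allocation; the pool names and slot counts are hypothetical, and the real Hadoop Fair Scheduler adds features this omits, such as pool weights, minimum shares, and preemption.

```python
from typing import Dict


def fair_share(capacity: float, demands: Dict[str, float]) -> Dict[str, float]:
    """Max-min fair allocation of `capacity` slots among pools with given demands."""
    alloc = {pool: 0.0 for pool in demands}
    remaining = float(capacity)
    active = {pool for pool, demand in demands.items() if demand > 0}
    while active and remaining > 1e-9:
        # Offer every still-hungry pool an equal slice of what is left.
        share = remaining / len(active)
        for pool in sorted(active):
            give = min(share, demands[pool] - alloc[pool])
            alloc[pool] += give
            remaining -= give
        # Pools whose demand is met drop out; their unused slice is re-split next round.
        active = {pool for pool in active if demands[pool] - alloc[pool] > 1e-9}
    return alloc


print(fair_share(100, {"adhoc": 20, "batch": 200}))  # {'adhoc': 20.0, 'batch': 80.0}
```

A small ad hoc pool gets everything it asks for, while the batch pool absorbs the rest instead of starving interactive users.
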