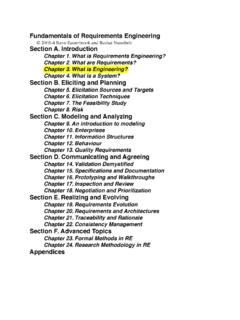

Transcription of Extracting and Composing Robust Features with Denoising ...

Extracting and Composing Robust Features with Denoising Autoencoders

Pascal [...] Université de Montréal, Dept. IRO, CP 6128, Succ. Centre-Ville, Montréal, Québec, H3C 3J7, Canada

Abstract

Previous work has shown that the difficulties in learning deep generative or discriminative models can be overcome by an initial unsupervised learning step that maps inputs to useful intermediate representations. We introduce and motivate a new training principle for unsupervised learning of a representation based on the idea of making the learned representations robust to partial corruption of the input pattern. This approach can be used to train autoencoders, and these denoising autoencoders can be stacked to initialize deep architectures.

The algorithm can be motivated from a manifold learning and information theoretic perspective or from a generative model perspective. Comparative experiments clearly show the surprising advantage of corrupting the input of autoencoders on a pattern classification benchmark suite.

Appearing in Proceedings of the 25th International Conference on Machine Learning, Helsinki, Finland, 2008. Copyright 2008 by the author(s)/owner(s).

Introduction

Recent theoretical studies indicate that deep architectures (Bengio & Le Cun, 2007; Bengio, 2007) may be needed to efficiently model complex distributions and achieve better generalization performance on challenging recognition tasks. The belief that additional levels of functional composition will yield increased representational and modeling power is not new (McClelland et al., 1986; Hinton, 1989; Utgoff & Stracuzzi, 2002). However, in practice, learning in deep architectures has proven to be difficult. One needs only to ponder the difficult problem of inference in deep directed graphical models, due to "explaining away". Also, looking back at the history of multi-layer neural networks, their difficult optimization (Bengio et al., 2007; Bengio, 2007) has long prevented reaping the expected benefits of going beyond one or two hidden layers.

However, this situation has recently changed with the successful approach of (Hinton et al., 2006; Hinton & Salakhutdinov, 2006; Bengio et al., 2007; Ranzato et al., 2007; Lee et al., 2008) for training Deep Belief Networks and stacked autoencoders.

One key ingredient to this success appears to be the use of an unsupervised training criterion to perform a layer-by-layer initialization: each layer is at first trained to produce a higher level (hidden) representation of the observed patterns, based on the representation it receives as input from the layer below, by optimizing a local unsupervised criterion. Each level produces a representation of the input pattern that is more abstract than the previous level's, because it is obtained by composing more operations. This initialization yields a starting point, from which a global fine-tuning of the model's parameters is then performed using another training criterion appropriate for the task at hand.
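The layer-by-layer initialization described above can be sketched in a few lines of NumPy. This is only an illustrative sketch under stated assumptions, not the procedure of any of the cited works: the local unsupervised criterion is assumed to be the cross-entropy reconstruction loss of a tied-weight sigmoid autoencoder, training is plain full-batch gradient descent, and the names `train_autoencoder_layer` and `greedy_pretrain` as well as the toy data are hypothetical. The global fine-tuning step is not shown.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def train_autoencoder_layer(X, n_hidden, epochs=200, lr=0.1, seed=0):
    """Train one tied-weight sigmoid autoencoder on X (values in [0, 1]).

    Full-batch gradient descent on the mean cross-entropy reconstruction
    loss (an assumed choice of local criterion).
    Returns the encoder parameters (W, b) and the per-epoch loss values.
    """
    rng = np.random.default_rng(seed)
    n, d = X.shape
    W = rng.normal(0.0, 0.1, size=(n_hidden, d))
    b = np.zeros(n_hidden)  # encoder bias
    c = np.zeros(d)         # decoder bias
    eps = 1e-9              # keeps log() finite
    losses = []
    for _ in range(epochs):
        Y = sigmoid(X @ W.T + b)   # hidden representation, shape (n, n_hidden)
        Z = sigmoid(Y @ W + c)     # reconstruction, shape (n, d)
        loss = -np.mean(np.sum(X * np.log(Z + eps)
                               + (1 - X) * np.log(1 - Z + eps), axis=1))
        losses.append(float(loss))
        dZ = (Z - X) / n                 # gradient w.r.t. decoder pre-activation
        dY = (dZ @ W.T) * Y * (1 - Y)    # gradient w.r.t. encoder pre-activation
        W -= lr * (dY.T @ X + Y.T @ dZ)  # tied weights: encoder + decoder paths
        b -= lr * dY.sum(axis=0)
        c -= lr * dZ.sum(axis=0)
    return W, b, losses

def greedy_pretrain(X, layer_sizes, epochs=200, lr=0.1):
    """Train each layer on the representation produced by the layer below."""
    params, history, H = [], [], X
    for k, n_hidden in enumerate(layer_sizes):
        W, b, losses = train_autoencoder_layer(H, n_hidden, epochs, lr, seed=k)
        params.append((W, b))
        history.append(losses)
        H = sigmoid(H @ W.T + b)  # input for the next layer
    return params, H, history

# Toy data (hypothetical): noisy copies of four random binary prototypes.
rng = np.random.default_rng(42)
prototypes = (rng.random((4, 20)) < 0.5).astype(float)
X = prototypes[rng.integers(0, 4, size=200)]
flip = rng.random(X.shape) < 0.05
X = np.where(flip, 1.0 - X, X)

params, top, history = greedy_pretrain(X, [12, 6])
for k, losses in enumerate(history):
    print(f"layer {k}: loss {losses[0]:.3f} -> {losses[-1]:.3f}")
```

The returned `(W, b)` pairs would serve as the starting point of a deep network, whose parameters are then fine-tuned globally with a criterion suited to the task.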

This technique has been shown empirically to avoid getting stuck in the kind of poor solutions one typically reaches with random initializations. While unsupervised learning of a mapping that produces "good" intermediate representations of the input pattern seems to be key, little is understood regarding what constitutes "good" representations for initializing deep architectures, or what explicit criteria may guide learning such representations. We know of only a few algorithms that seem to work well for this purpose: Restricted Boltzmann Machines (RBMs) trained with contrastive divergence on one hand, and various types of autoencoders on the other.

The present research begins with the question of what explicit criteria a good intermediate representation should satisfy.

Obviously, it should at a minimum retain a certain amount of "information" about its input, while at the same time being constrained to a given form (e.g. a real-valued vector of a given size in the case of an autoencoder). A supplemental criterion that has been proposed for such models is sparsity of the representation (Ranzato et al., 2008; Lee et al., 2008). Here we hypothesize and investigate an additional specific criterion: robustness to partial destruction of the input, i.e., partially destroyed inputs should yield almost the same representation. It is motivated by the following informal reasoning: a good representation is expected to capture stable structures in the form of dependencies and regularities characteristic of the (unknown) distribution of its observed input.

For high dimensional redundant input (such as images) at least, such structures are likely to depend on evidence gathered from a combination of many input dimensions. They should thus be recoverable from partial observation only. A hallmark of this is our human ability to recognize partially occluded or corrupted images. Further evidence is our ability to form a high level concept associated with multiple modalities (such as image and sound) and recall it even when some of the modalities are missing.

To validate our hypothesis and assess its usefulness as one of the guiding principles in learning deep architectures, we propose a modification to the autoencoder framework to explicitly integrate robustness to partially destroyed inputs.
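The robustness criterion hypothesized above can be made concrete by measuring how far a representation moves when part of its input is destroyed. The sketch below is only an illustration under assumptions that the text so far does not fix: "destruction" is taken to mean setting a random fraction of input components to zero, the representation is a sigmoid encoding of the form s(Wx + b), and the weights are random and untrained. The helper names `destroy` and `representation_shift` are hypothetical.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def destroy(x, frac, rng):
    """Return a copy of x with a random fraction `frac` of components set to 0.

    Zeroing components is an assumed form of partial destruction.
    """
    x_tilde = x.copy()
    n_destroy = int(round(frac * x.size))
    idx = rng.choice(x.size, size=n_destroy, replace=False)
    x_tilde[idx] = 0.0
    return x_tilde

def representation_shift(W, b, x, frac, rng):
    """Mean absolute change of y = sigmoid(W x + b) under partial destruction."""
    y_clean = sigmoid(W @ x + b)
    y_corrupt = sigmoid(W @ destroy(x, frac, rng) + b)
    return float(np.mean(np.abs(y_clean - y_corrupt)))

rng = np.random.default_rng(0)
d, d_hidden = 30, 10
W = rng.normal(0.0, 0.5, size=(d_hidden, d))  # hypothetical, untrained weights
b = np.zeros(d_hidden)
x = rng.random(d)                             # toy input in [0, 1]^d

for frac in (0.0, 0.1, 0.25, 0.5):
    print(frac, representation_shift(W, b, x, frac, rng))
```

A representation that satisfies the criterion would keep this shift small for moderate destruction fractions; the random encoder here has no reason to do so and only shows how the quantity could be measured.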

Section 2 describes the algorithm in detail. Section 3 discusses links with other approaches in the literature. Section 4 is devoted to a closer inspection of the model from different theoretical standpoints. In Section 5 we verify empirically if the algorithm leads to a difference in performance. Section 6 concludes the study.

Description of the Algorithm

Notation and Setup

Let $X$ and $Y$ be two random variables with joint probability density $p(X, Y)$, with marginal distributions $p(X)$ and $p(Y)$. Throughout the text, we will use the following notation:

- Expectation: $\mathbb{E}_{p(X)}[f(X)] = \int p(x) f(x)\,dx$.
- Entropy: $\mathbb{H}(X) = \mathbb{H}(p) = \mathbb{E}_{p(X)}[-\log p(X)]$.
- Conditional entropy: $\mathbb{H}(X|Y) = \mathbb{E}_{p(X,Y)}[-\log p(X|Y)]$.
- Kullback-Leibler divergence: $\mathbb{D}_{\mathrm{KL}}(p \| q) = \mathbb{E}_{p(X)}\left[\log \frac{p(X)}{q(X)}\right]$.
- Cross-entropy: $\mathbb{H}(p \| q) = \mathbb{E}_{p(X)}[-\log q(X)] = \mathbb{H}(p) + \mathbb{D}_{\mathrm{KL}}(p \| q)$.
- Mutual information: $I(X; Y) = \mathbb{H}(X) - \mathbb{H}(X|Y)$.
- Sigmoid: $s(x) = \frac{1}{1 + e^{-x}}$ and $s(\mathbf{x}) = (s(x_1), \dots, s(x_d))^T$.
- Bernoulli distribution with mean $\mu$: $\mathcal{B}_{\mu}(x)$, and by extension $\mathcal{B}_{\boldsymbol{\mu}}(\mathbf{x}) = (\mathcal{B}_{\mu_1}(x_1), \dots, \mathcal{B}_{\mu_d}(x_d))$.

The setup we consider is the typical supervised learning setup with a training set of $n$ (input, target) pairs $D_n = \{(\mathbf{x}^{(1)}, t^{(1)}), \dots, (\mathbf{x}^{(n)}, t^{(n)})\}$, that we suppose to be an i.i.d. sample from an unknown distribution $q(X, T)$ with corresponding marginals $q(X)$ and $q(T)$.

The Basic Autoencoder

We begin by recalling the traditional autoencoder model such as the one used in (Bengio et al., 2007) to build deep networks. An autoencoder takes an input vector $\mathbf{x} \in [0,1]^d$, and first maps it to a hidden representation $\mathbf{y} \in [0,1]^{d'}$ through a deterministic mapping $\mathbf{y} = f_{\theta}(\mathbf{x}) = s(\mathbf{W}\mathbf{x} + \mathbf{b})$, parameterized by $\theta = \{\mathbf{W}, \mathbf{b}\}$. $\mathbf{W}$ is a $d' \times d$ weight matrix and $\mathbf{b}$ is a bias vector. The resulting latent representation $\mathbf{y}$ is then mapped back to a "reconstructed" vector $\mathbf{z} \in [0,1]^d$ in input space, $\mathbf{z} = g_{\theta'}(\mathbf{y}) = s(\mathbf{W}'\mathbf{y} + \mathbf{b}')$ with $\theta' = \{\mathbf{W}', \mathbf{b}'\}$. The weight matrix $\mathbf{W}'$ of the reverse mapping may optionally be constrained by $\mathbf{W}' = \mathbf{W}^T$, in which case the autoencoder is said to have tied weights. Each training $\mathbf{x}^{(i)}$ is thus mapped to a corresponding $\mathbf{y}^{(i)}$ and a reconstruction $\mathbf{z}^{(i)}$. The parameters of this model are optimized to minimize the average reconstruction error: