Transcription of VPLEX™ Overview and General Best Practices

H13545

Best Practices

VPLEX Overview and General Best Practices

Implementation Planning and Best Practices

Abstract

This white paper provides an overview of VPLEX and general best practices. It provides guidance for VS2 and VS6 hardware.

2019

Revisions

| Date | Description |
| --- | --- |
| March 2019 | Version 4 |

Acknowledgements

This paper was produced by the following:

Author: VPLEX CSE Team

The information in this publication is provided "as is." Dell Inc. makes no representations or warranties of any kind with respect to the information in this publication, and specifically disclaims implied warranties of merchantability or fitness for a particular purpose.

Use, copying, and distribution of any software described in this publication requires an applicable software license.

Copyright March 2019 Dell Inc. or its subsidiaries. All Rights Reserved. Dell, EMC, Dell EMC and other trademarks are trademarks of Dell Inc. or its subsidiaries. Other trademarks may be trademarks of their respective owners. [5/16/2019] [Best Practices] [H13545]

Table of contents

- Acknowledgements
- Table of contents
- Executive summary
- 1 VPLEX Overview
  - VPLEX Platform Availability and Scaling Summary
  - Data Mobility
  - Continuous Availability
  - Virtualization architecture
  - Storage/Service Availability
- 2 VPLEX Components
  - VPLEX Engine (VS2 and VS6)
  - Connectivity and I/O Paths
  - General Information
- 3 System
  - Metadata Volumes
  - Backup Policies and Planning
  - Logging Volumes
- 4 Requirements vs. Recommendations Table

Executive summary

EMC VPLEX represents the next-generation architecture for data mobility and continuous availability. This architecture is based on EMC's 20+ years of expertise in designing, implementing, and perfecting enterprise-class intelligent cache and distributed data protection solutions.

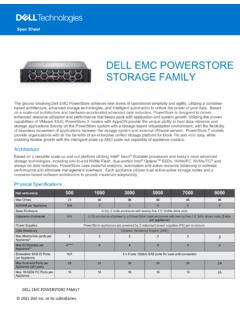

VPLEX addresses two distinct use cases:

- Data Mobility: The ability to move applications and data across different storage installations within the same datacenter, across a campus, or within a geographical region.
- Continuous Availability: The ability to create a continuously available storage infrastructure across the same varied geographies with unmatched resiliency.

Access anywhere, protect everywhere

1 VPLEX Overview

VPLEX Platform Availability and Scaling Summary

VPLEX addresses continuous availability and data mobility requirements and scales to the I/O throughput required for the front-end applications and back-end storage. Continuous availability and data mobility features are characteristics of both VPLEX Local and VPLEX Metro.

A VPLEX VS2 cluster consists of one, two, or four engines (each containing two directors), and a management server. A dual-engine or quad-engine cluster also contains a pair of Fibre Channel switches for communication between directors within the cluster.
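The sizing rules in the paragraph above can be sketched in a few lines of Python. This is only an illustration: `ClusterLayout` and its fields are hypothetical names chosen for this example and are not part of any VPLEX interface.

```python
from dataclasses import dataclass

# Engine counts the paper lists as valid for a cluster.
VALID_ENGINE_COUNTS = (1, 2, 4)
# Each engine contains two directors.
DIRECTORS_PER_ENGINE = 2


@dataclass(frozen=True)
class ClusterLayout:
    """Hypothetical model of a cluster's hardware layout (not a VPLEX API)."""
    engines: int

    def __post_init__(self):
        # Reject engine counts the paper does not describe (for example, 3).
        if self.engines not in VALID_ENGINE_COUNTS:
            raise ValueError(f"engines must be one of {VALID_ENGINE_COUNTS}")

    @property
    def directors(self) -> int:
        return self.engines * DIRECTORS_PER_ENGINE

    @property
    def needs_internal_switches(self) -> bool:
        # Only dual-engine and quad-engine clusters contain the switch pair
        # used for communication between directors.
        return self.engines >= 2


if __name__ == "__main__":
    for count in VALID_ENGINE_COUNTS:
        layout = ClusterLayout(count)
        print(count, layout.directors, layout.needs_internal_switches)
```

A single-engine cluster therefore has two directors and no internal switches, while a quad-engine cluster has eight directors and one switch pair.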

A VPLEX VS6 cluster consists of one, two, or four engines (each containing two directors), and dual integrated MMCS modules in Engine 1 that replace the management server. A dual-engine or quad-engine cluster also contains a pair of InfiniBand switches for communication between directors within the cluster.

Each engine is protected by a standby power supply (SPS) on the VS2 or integrated battery backup modules (BBU) on the VS6, and each internal switch gets its power through an uninterruptible power supply (UPS). (In a dual-engine or quad-engine cluster, the management server on VS2 also gets power from a UPS.)

The management server has a public Ethernet port, which provides cluster management services when connected to the customer network. This Ethernet port also provides the point of access for communications with the VPLEX Witness. The MMCS modules on VS6 provide the same service as the management server on VS2. Both MMCS modules on engine 1 of the VS6 must be connected via their public Ethernet port to the customer network and allow communication over both ports.

This adds the requirement to allocate two IP addresses per cluster for the VS6. The GUI and CLI are accessed strictly through MMCS-A, and the vplexcli is disabled on MMCS-B.

VPLEX scales both up and out. Upgrades from a single-engine to a dual-engine cluster, as well as from a dual-engine to a quad-engine cluster, are fully supported and are accomplished non-disruptively. This is referred to as scale up. A scale-out upgrade from a VPLEX Local to a VPLEX Metro is also supported non-disruptively. Generational upgrades from VS2 to VS6 are also non-disruptive.

Data Mobility

Dell EMC VPLEX enables connectivity to heterogeneous storage arrays, providing seamless data mobility and the ability to manage storage provisioned from multiple heterogeneous arrays from a single interface within a data center. Data Mobility and Mirroring are supported across different array types and vendors. VPLEX Metro configurations enable migrations between locations over synchronous distances.

In combination with, for example, VMware and Distance vMotion or Microsoft Hyper-V, it allows you to transparently relocate virtual machines and their corresponding applications and data over synchronous distance. This provides you with the ability to relocate, share, and balance infrastructure resources between data centers.

All directors in a VPLEX cluster have access to all storage volumes, which makes this solution what is referred to as an N-1 architecture. This type of architecture tolerates multiple director failures, down to a single surviving director, without loss of access to data.

During a VPLEX Mobility operation, any jobs in progress can be paused or stopped without affecting data integrity. Data Mobility creates a mirror of the source and target devices, allowing the user to commit or cancel the job without affecting the actual data. A record of all mobility jobs is maintained until the user purges the list for organizational purposes.
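One way to picture the mobility job lifecycle described above is as a small state machine. The Python sketch below is an interpretation of that paragraph, not the product's actual state model: the state names, the intermediate `COMPLETE` state (mirror fully synchronized), and the rule that `purge` drops only finished jobs are all assumptions made for illustration.

```python
from enum import Enum


class JobState(Enum):
    IN_PROGRESS = "in-progress"
    PAUSED = "paused"
    COMPLETE = "complete"      # assumed: source and target are in sync
    COMMITTED = "committed"
    CANCELLED = "cancelled"


# Allowed transitions as read from the text (an assumption, not VPLEX's model).
TRANSITIONS = {
    JobState.IN_PROGRESS: {JobState.PAUSED, JobState.COMPLETE, JobState.CANCELLED},
    JobState.PAUSED: {JobState.IN_PROGRESS, JobState.CANCELLED},
    JobState.COMPLETE: {JobState.COMMITTED, JobState.CANCELLED},
    JobState.COMMITTED: set(),
    JobState.CANCELLED: set(),
}


class MobilityJob:
    """Hypothetical mobility job; the source data is never modified here."""

    def __init__(self, name):
        self.name = name
        self.state = JobState.IN_PROGRESS

    def move_to(self, new_state):
        if new_state not in TRANSITIONS[self.state]:
            raise ValueError(f"{self.state.value} -> {new_state.value} is not allowed")
        self.state = new_state


class JobLog:
    """Keeps a record of every job until the user purges the list."""

    def __init__(self):
        self.records = []

    def add(self, job):
        self.records.append(job)

    def purge(self):
        # Assumed behaviour: only committed or cancelled jobs are removed.
        finished = {JobState.COMMITTED, JobState.CANCELLED}
        self.records = [j for j in self.records if j.state not in finished]


if __name__ == "__main__":
    log = JobLog()
    job = MobilityJob("array_refresh_01")  # hypothetical job name
    log.add(job)
    job.move_to(JobState.PAUSED)       # pause without affecting data
    job.move_to(JobState.IN_PROGRESS)  # resume
    job.move_to(JobState.COMPLETE)
    job.move_to(JobState.COMMITTED)
    log.purge()
    print(len(log.records))
```

Modelling cancel as reachable from every non-final state mirrors the statement that a job can be stopped at any point without affecting the actual data.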

Migration Comparison

One of the first and most common use cases for storage virtualization in general is that it provides a simple, transparent approach for array replacement. Standard migrations off an array are time consuming because planned outages must be coordinated for every application that cannot have new devices provisioned and copied to without taking an outage. Additional host remediation may be required to support the new array, which may also require an outage.

VPLEX eliminates all these problems and makes the array replacement completely seamless and transparent to the servers. The applications continue to operate uninterrupted during the entire process. Host remediation is not necessary because the host continues to operate off the virtual volumes provisioned from VPLEX and is not aware of the change in the back-end array. All host-level support requirements apply only to VPLEX, and there are no necessary considerations for the back-end arrays because that is handled through VPLEX.

If the solution incorporates RecoverPoint, and the RecoverPoint Repository, Journal, and Replica volumes reside on VPLEX virtual volumes, then array replacement is also completely transparent even to RecoverPoint. This solution results in no interruption in the replication, so there is no requirement to reconfigure or resynchronize the replication volumes.

Continuous Availability

Virtualization architecture

Built on a foundation of scalable and continuously available multi-processor engines, EMC VPLEX is designed to seamlessly scale from small to large configurations. VPLEX resides between the servers and heterogeneous storage assets and uses a unique clustering architecture that allows servers at multiple data centers to have read/write access to shared block storage devices. Unique characteristics of this architecture include:

- Scale-out clustering hardware lets you start small and grow big with predictable service levels
- Advanced data caching utilizes large-scale cache to improve performance and reduce I/O latency and array contention
- Distributed cache coherence for automatic sharing, balancing, and failover of I/O across the cluster
- Consistent view of one or more LUNs across VPLEX clusters (within a data center or across synchronous distances), enabling new models of continuous availability and workload relocation

With a unique scale-up and scale-out architecture, VPLEX advanced data caching and distributed cache coherency provide workload resiliency, automatic sharing, balancing, and failover of storage domains, and enable both local and remote data access with predictable service levels.

EMC VPLEX has been architected for multi-site virtualization, enabling federation across VPLEX Clusters. VPLEX Metro supports a maximum round-trip time (RTT) of 10 ms over FC or 10 GigE connectivity. The nature of the architecture will enable more than two sites to be connected in the future.

EMC VPLEX uses a VMware virtual machine located within a separate failure domain to provide a VPLEX Witness between VPLEX Clusters that are part of a distributed/federated solution. This third site needs only IP connectivity to the VPLEX sites, and a 3-way VPN is established between the VPLEX management servers and the VPLEX Witness. Many other solutions require a third site with an FC LUN acting as the quorum disk. That LUN must be accessible from the solution's node in each site, resulting in additional storage and link costs.

Storage/Service Availability

Each VPLEX site has a local VPLEX Cluster, and physical storage and hosts are connected to that VPLEX Cluster.

The VPLEX Clusters themselves are interconnected across the sites to enable continuous availability. A device is taken from each of the VPLEX Clusters to create a distributed RAID 1 virtual volume. Hosts connected in Site A actively use the storage I/O capability of the storage in Site A, and hosts in Site B actively use the storage I/O capability of the storage in Site B.

Continuous Availability architecture

VPLEX distributed volumes are available from either VPLEX cluster and have the same LUN and storage identifiers when exposed from each cluster, enabling true concurrent read/write access across sites. Essentially, the distributed device seen at each site is the same volume with global visibility.

When using a distributed virtual volume across two VPLEX Clusters, if the storage in one of the sites is lost, all hosts continue to have access to the distributed virtual volume, with no disruption. VPLEX services all read/write traffic through the remote mirror leg at the other site.
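The failover behaviour described above can be illustrated with a toy model of a two-legged distributed RAID 1 volume. This is a deliberately simplified sketch under stated assumptions: `MirrorLeg` and `DistributedVolume` are hypothetical names, and the model ignores caching, cache coherence, rebuilds after a leg returns, and VPLEX Witness arbitration.

```python
from dataclasses import dataclass, field


@dataclass
class MirrorLeg:
    """One site's copy of the volume (hypothetical model, not a VPLEX object)."""
    site: str
    healthy: bool = True
    blocks: dict = field(default_factory=dict)


class DistributedVolume:
    """Toy distributed RAID 1 volume with one mirror leg per site."""

    def __init__(self, leg_a, leg_b):
        self.legs = {leg_a.site: leg_a, leg_b.site: leg_b}

    def _serving_leg(self, host_site):
        # Prefer the leg at the host's own site; otherwise use the remote leg.
        local = self.legs[host_site]
        if local.healthy:
            return local
        for leg in self.legs.values():
            if leg.healthy:
                return leg
        raise RuntimeError("no healthy mirror leg available")

    def write(self, host_site, lba, data):
        # RAID 1: every healthy leg receives the write.
        healthy = [leg for leg in self.legs.values() if leg.healthy]
        if not healthy:
            raise RuntimeError("no healthy mirror leg available")
        for leg in healthy:
            leg.blocks[lba] = data

    def read(self, host_site, lba):
        return self._serving_leg(host_site).blocks[lba]


if __name__ == "__main__":
    volume = DistributedVolume(MirrorLeg("A"), MirrorLeg("B"))
    volume.write("A", 0, b"payload")
    volume.legs["A"].healthy = False   # simulate loss of storage at Site A
    print(volume.read("A", 0))         # served from the Site B leg
```

In this model a host at Site A keeps reading the same data after the Site A leg fails, because the read is redirected to the surviving leg at Site B.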