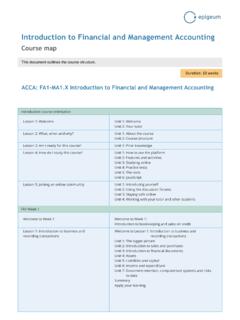

Transcription of Machine Learning Basic Concepts - edX

1 Machine Learning: Basic Concepts

[Figure: plot with axes Feature 1 and Feature 2, showing a decision boundary.]

Terminology: Machine Learning, Data Science, Data Mining, Data Analysis, Statistical Learning, Knowledge Discovery in Databases, Pattern [...]

[...] everywhere!

- [...]: processes 24 petabytes of data per [...]
- [...]: 10 million photos uploaded every [...]
- [...]: 1 hour of video uploaded every [...]
- [...]: 400 million tweets per [...]
- Satellite data is in hundreds of [...]
- By 2020 the digital universe will reach 44 trillion gigabytes! (Source: The Digital Universe of Opportunities: Rich Data and the Increasing Value of the Internet of Things, April [...])

Data types: data comes in different sizes and also flavors (types):

- Texts
- Numbers
- Clickstreams
- Graphs
- Tables
- Images
- Transactions
- Videos
- Some or all of the above!

2 Smile, we are DATAFIED!

- Wherever we go, we are datafied.
- Smartphones are tracking our locations.
- We leave a data trail in our web browsing.
- Interaction in social networks.
- Privacy is an important issue in Data Science.

The Data Science process

[Figure: the data science process over time. Data collection (static data, databases); data preparation (data cleaning, feature/variable engineering); EDA (visualization, descriptive statistics, clustering; research questions?); machine learning (classification, scoring, predictive models, clustering, density estimation, etc.); data-driven decisions (application deployment, dashboard, model f, predicted class/risk).]

Applications of ML: we all use it on a daily basis.

3 Examples of Machine Learning:

- Spam filtering
- Credit card fraud detection
- Digit recognition on checks, zip codes
- Detecting faces in images
- MRI image analysis
- Recommendation system
- Search engines
- Handwriting recognition
- Scene classification

[Figure: ML as a field alongside Statistics, Visualization, Economics, Databases, Signal processing, Engineering, and Biology.]

ML versus Statistics

Statistics: hypothesis testing, experimental design, ANOVA, linear regression, logistic regression, GLM, PCA.

Machine Learning: decision trees, rule induction, neural networks, SVMs, clustering method, association rules, feature selection, visualization, graphical models, genetic [...]

(Source: [...]~jhf/)

Machine Learning definition: How do we create computer programs that improve with experience?

4 Machine Learning definition

Tom Mitchell: A computer program is said to learn from experience $E$ with respect to some class of tasks $T$ and performance measure $P$, if its performance at tasks in $T$, as measured by $P$, improves with experience $E$. (Tom Mitchell. Machine Learning [...])

Supervised vs. Unsupervised

Given: training data $(x_1, y_1), \ldots, (x_n, y_n)$ / $x_i \in \mathbb{R}^d$ and $y_i$ is the label.

$$
\begin{array}{lcccl}
\text{example } x_1 \rightarrow & x_{11} & \cdots & x_{1d} & y_1 \leftarrow \text{label} \\
\vdots & & & & \vdots \\
\text{example } x_i \rightarrow & x_{i1} & \cdots & x_{id} & y_i \leftarrow \text{label} \\
\vdots & & & & \vdots \\
\text{example } x_n \rightarrow & x_{n1} & \cdots & x_{nd} & y_n \leftarrow \text{label}
\end{array}
$$

- Unsupervised learning: learning a model from examples without labels.
- Supervised learning: learning a model from examples with labels.

Unsupervised learning. Training data: examples $x$.

5 $x_1, \ldots, x_n$, $x_i \in X \subset \mathbb{R}^n$

Clustering/segmentation: $f: \mathbb{R}^d \rightarrow \{C_1, \ldots, C_k\}$ (set of clusters).

Example: find clusters in the population, fruits, [...]

[Figure: unsupervised learning, points plotted against Feature 1 and Feature 2.]

Methods: K-means, Gaussian mixtures, hierarchical clustering, spectral clustering, [...]

Supervised learning. Training data: examples $x$ with labels $y$: $(x_1, y_1), \ldots, (x_n, y_n)$ / $x_i \in \mathbb{R}^d$

Classification: $y$ is discrete. To simplify, $y \in \{-1, +1\}$.

$f: \mathbb{R}^d \rightarrow \{-1, +1\}$; $f$ is called a binary classifier.

Example: approve credit yes/no, spam/ham, [...]

[Figure: supervised learning, points plotted against Feature 1 and Feature 2 with a decision boundary.]

Methods: Support Vector Machines, neural networks, decision trees, K-nearest neighbors, naive Bayes, [...]

6 [Figure: supervised learning, classification examples plotted against Feature 1 and Feature 2, including non-linear classification.]

Supervised learning. Training data: examples $x$ with labels $y$: $(x_1, y_1), \ldots, (x_n, y_n)$ / $x_i \in \mathbb{R}^d$

Regression: $y$ is a real value, $y \in \mathbb{R}$.

$f: \mathbb{R}^d \rightarrow \mathbb{R}$; $f$ is called a [...]

Example: amount of credit, weight of [...]

[Figure: supervised learning, regression.]

Example: income as a function of age, weight of the fruit as a function of its [...]

Training and Testing

[Figure: Training set, ML Algorithm, Model (f). Inputs: income, gender, age, family status, zipcode. Outputs: credit amount? credit yes/no.]

7 K-nearest neighbors

- Not every ML method builds a model!
- Our first ML method: KNN.
- Main idea: uses the similarity between examples.
- Assumption: two similar examples should have the same labels.
- Assumes all examples (instances) are points in the $d$-dimensional space $\mathbb{R}^d$.

KNN uses the standard Euclidean distance to define nearest neighbors. Given two examples $x_i$ and $x_j$:

$$d(x_i, x_j) = \sqrt{\sum_{k=1}^{d} (x_{ik} - x_{jk})^2}$$

Training algorithm: add each training example $(x, y)$ to the stored training data, with $x \in \mathbb{R}^d$, $y \in \{+1, -1\}$.

Classification algorithm: given an example $x_q$ to be classified.

8 Suppose $N_k(x_q)$ is the set of the $K$ nearest neighbors of $x_q$. Then

$$y_q = \mathrm{sign}\left(\sum_{x_i \in N_k(x_q)} y_i\right)$$

[Figure: 3-NN. Credit: Introduction to Statistical Learning.]

Question: Draw an approximate decision boundary for $K = 3$?

Question: What are the pros and cons of K-NN?

Pros:

- Simple to implement.
- Works well in practice.
- Does not require building a model, making assumptions, or tuning parameters.
- Can be extended easily with new examples.

9 Cons:

- Requires large space to store the entire training set.
- Slow! Given $n$ examples and $d$ features, the method takes $O(n \times d)$ to [...]
- Suffers from the curse of dimensionality.

Applications of K-NN:

1. Information [...]
2. Handwritten character classification using nearest neighbor in large [...]
3. Recommender systems (a user like you may like similar movies).
4. Breast cancer [...]
5. Medical data mining (similar patient symptoms).
6. Pattern recognition in [...]

Training and Testing

[Figure: Training set, ML Algorithm, Model (f). Inputs: income, gender, age, family status, zipcode. Outputs: credit amount? credit yes/no.]
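
The K-nearest neighbors procedure described above (Euclidean distance, a training step that only stores examples, and classification by the sign of the summed neighbor labels) can be sketched as follows. This is a minimal illustration, not the course's implementation: the names `euclidean_distance` and `KNNClassifier` are hypothetical, the six data points are made up, and ties in the vote (possible only for even K) are arbitrarily resolved to -1.

```python
import math


def euclidean_distance(xi, xj):
    """d(xi, xj) = sqrt(sum over k of (xik - xjk)^2)."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(xi, xj)))


class KNNClassifier:
    """Hypothetical brute-force K-NN for labels in {+1, -1}."""

    def __init__(self, k=3):
        self.k = k
        self.examples = []  # stored (x, y) pairs; no model is built

    def train(self, x, y):
        # Training only adds the example to the stored data.
        self.examples.append((tuple(x), y))

    def classify(self, xq):
        # One distance per stored example costs O(n * d);
        # the sort adds O(n log n) on top of that.
        by_distance = sorted(
            self.examples, key=lambda ex: euclidean_distance(ex[0], xq)
        )
        neighbors = by_distance[: self.k]
        vote = sum(y for _, y in neighbors)
        # Sign of the summed labels; an assumed tie-break sends 0 to -1.
        return 1 if vote > 0 else -1


# Made-up toy data: three points per class in two dimensions.
knn = KNNClassifier(k=3)
toy_data = [
    ((1.0, 1.0), 1), ((1.5, 2.0), 1), ((2.0, 1.0), 1),
    ((6.0, 6.0), -1), ((7.0, 5.5), -1), ((6.5, 7.0), -1),
]
for x, y in toy_data:
    knn.train(x, y)

print(knn.classify((1.2, 1.4)))
print(knn.classify((6.4, 6.1)))
```

Choosing an odd K avoids tied votes when the labels are +1 and -1, which is one reason K = 3 is a common first choice.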

10 Question: How can we be confident about $f$?

Training and Testing

We calculate $E_{train}$, the in-sample error (training error or empirical error/risk):

$$E_{train}(f) = \sum_{i=1}^{n} \mathrm{loss}(y_i, f(x_i))$$

Examples of loss functions:

- Classification error:

$$\mathrm{loss}(y_i, f(x_i)) = \begin{cases} 1 & \text{if } \mathrm{sign}(y_i) \neq \mathrm{sign}(f(x_i)) \\ 0 & \text{otherwise} \end{cases}$$

- Least square loss:

$$\mathrm{loss}(y_i, f(x_i)) = (y_i - f(x_i))^2$$
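
The training error and the two loss functions above can be computed as in the sketch below. The function names, the model `f`, and the four data points are all hypothetical and chosen only for illustration; `sign(0)` is assumed to be +1, which the source does not specify.

```python
def sign(v):
    # Assumption: sign(0) is treated as +1.
    return 1 if v >= 0 else -1


def classification_loss(y, prediction):
    """1 if the signs of label and prediction differ, 0 otherwise."""
    return 1 if sign(y) != sign(prediction) else 0


def least_square_loss(y, prediction):
    """(y - f(x))^2"""
    return (y - prediction) ** 2


def training_error(f, data, loss):
    """E_train(f) = sum over i of loss(y_i, f(x_i)), with no 1/n factor."""
    return sum(loss(y, f(x)) for x, y in data)


def f(x):
    # Hypothetical model: a fixed one-dimensional threshold at 2.0.
    return x[0] - 2.0


# Made-up training data: (x, y) pairs with y in {+1, -1}.
data = [((1.0,), -1), ((3.0,), 1), ((2.5,), -1), ((0.5,), -1)]

print(training_error(f, data, classification_loss))
print(training_error(f, data, least_square_loss))
```

Passing the loss as an argument keeps `training_error` identical for classification and regression; only the loss function changes.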