Transcription of Neural nano-optics for high-quality thin lens imaging

Neural nano-optics for high-quality thin lens imaging

Ethan Tseng1,4, Shane Colburn2,4, James Whitehead2, Luocheng Huang2, Seung-Hwan Baek1, Arka Majumdar2,3 & Felix Heide1

Nano-optic imagers that modulate light at sub-wavelength scales could enable new applications in diverse domains ranging from robotics to medicine. Although metasurface optics offer a path to such ultra-small imagers, existing methods have achieved image quality far worse than bulky refractive alternatives, fundamentally limited by aberrations at large apertures and low f-numbers. In this work, we close this performance gap by introducing a neural nano-optics imager. We devise a fully differentiable learning framework that learns a metasurface physical structure in conjunction with a neural feature-based image reconstruction algorithm. Experimentally validating the proposed method, we achieve an order of magnitude lower reconstruction error than existing approaches. As such, we present a high-quality nano-optic imager that combines the widest field-of-view for full-color metasurface operation while simultaneously achieving the largest demonstrated aperture of 0.5 mm at an f-number of 2.

1 Princeton University, Department of Computer Science, Princeton, NJ. 2 University of Washington, Department of Electrical and Computer Engineering, Washington, WA. 3 University of Washington, Department of Physics, Washington, WA. 4 These authors contributed equally: Ethan Tseng and Shane Colburn.

Nature Communications (2021) 12:6493

The miniaturization of intensity sensors in recent decades has made today's cameras ubiquitous across many application domains, including medical imaging, commodity smartphones, security, robotics, and autonomous driving. However, imagers that are an order of magnitude smaller could enable numerous novel applications in nano-robotics, in vivo imaging, AR/VR, and health monitoring. While sensors with submicron pixels do exist, further miniaturization has been prohibited by the fundamental limitations of conventional optics. Traditional imaging systems consist of a cascade of refractive elements that correct for aberrations, and these bulky lenses impose a lower limit on camera footprint. A further fundamental barrier is the difficulty of reducing focal length, as this induces greater chromatic aberrations. We turn towards computationally designed metasurface optics (meta-optics) to close this gap and enable ultra-compact cameras that could facilitate new capabilities in endoscopy, brain imaging, or in a distributed fashion as collaborative optical dust on scene surfaces.

Ultrathin meta-optics utilize subwavelength nano-antennas to modulate incident light with greater design freedom and space-bandwidth product than conventional diffractive optical elements (DOEs) [1–4]. Furthermore, the rich modal characteristics of meta-optical scatterers can support multifunctional capabilities beyond what traditional DOEs can do (e.g., polarization, frequency, and angle multiplexing). Meta-optics can be fabricated using widely available integrated circuit foundry techniques, such as deep ultraviolet lithography (DUV), without the multiple etch steps, diamond turning, or grayscale lithography used in polymer-based DOEs or binary optics. Because of these advantages, researchers have harnessed the potential of meta-optics for building flat optics for imaging [5–7], polarization control [8], and holography [9]. Existing metasurface imaging methods, however, suffer from an order of magnitude higher reconstruction error than achievable with refractive compound lenses due to severe, wavelength-dependent aberrations that arise from discontinuities in their imparted phase [2,5,10–16].

Dispersion engineering aims to mitigate this by exploiting group delay and group delay dispersion to focus broadband light [15–21]; however, this technique is fundamentally limited to aperture designs of ~10s of microns [22]. As such, existing approaches have not been able to increase the achievable aperture sizes without significantly reducing the numerical aperture or supported wavelength range. Other attempted solutions only suffice for discrete wavelengths or narrowband illumination [11–14]. Metasurfaces also exhibit strong geometric aberrations that have limited their utility for wide field-of-view (FOV) imaging. Approaches that support wide FOV typically rely on either small input apertures that limit light collection [24] or use multiple metasurfaces [11], which drastically increases fabrication complexity. Moreover, these multiple metasurfaces are separated by a gap that scales linearly with the aperture, thus obviating the size benefit of meta-optics as the aperture increases.

Researchers have leveraged computational imaging to offload aberration correction to post-processing software [10,25]. Although these approaches enable full-color imaging metasurfaces without stringent aperture limitations, they are limited to a FOV below 20° and the reconstructed spatial resolution is an order of magnitude below that of conventional refractive optics. Existing learned deconvolution methods [27] have been restricted to variants of standard encoder-decoder architectures, such as the U-Net [28], and often fail to generalize to experimental measurements or handle large aberrations, as found in broadband metasurface imaging.

Researchers have proposed camera designs that utilize a single optic instead of compound stacks [29,30].

But these systems fail to match the performance of commodity imagers due to low diffraction efficiency. Moreover, the most successful approaches [29–31] hinder miniaturization because of their long back focal distances of more than 10 mm. Lensless cameras [32] instead reduce the size by replacing the optics with amplitude masks, but this severely limits spatial resolution and requires long acquisition times.

A variety of inverse design techniques have been proposed for meta-optics. Existing end-to-end optimization frameworks for meta-optics [7,33,34] are unable to scale to large aperture sizes due to prohibitive memory requirements and do not optimize for the final full-color image quality, often relying instead on intermediary metrics such as focal spot intensity.

In this work, we propose neural nano-optics, a high-quality, polarization-insensitive nano-optic imager for full-color (400 to 700 nm), wide FOV (40°) imaging with an f-number of 2. In contrast to previous works that rely on hand-crafted designs and reconstruction, we jointly optimize the metasurface and deconvolution algorithm with an end-to-end differentiable image formation model.

The differentiability allows us to employ first-order solvers, which have been popularized by deep learning, for joint optimization of all parameters of the pipeline, from the design of the meta-optic to the reconstruction algorithm. The image formation model exploits a memory-efficient differentiable nano-scatterer simulator, as well as a neural feature-based reconstruction architecture. We outperform existing methods by an order of magnitude in reconstruction error outside the nominal wavelength range on experimental captures.

Differentiable metasurface proxy model. The proposed differentiable metasurface image formation model (Fig. 1e) consists of three sequential stages that utilize differentiable tensor operations: metasurface phase determination, PSF simulation and convolution, and sensor noise. In our model, polynomial coefficients that determine the metasurface phase are optimizable variables, whereas experimentally calibrated parameters characterizing the sensor readout and the sensor-metasurface distance are fixed.

The optimizable metasurface phase function $\phi$ as a function of distance $r$ from the optical axis is given by

$$\phi(r) = \sum_{i=0}^{n} a_i \left(\frac{r}{R}\right)^{2i} \qquad (1)$$

where $\{a_0, \ldots, a_n\}$ are optimizable coefficients, $R$ is the phase mask radius, and $n$ is the number of polynomial terms. We optimize the metasurface in this phase function basis as opposed to in a pixel-by-pixel manner to avoid local minima. The number of terms $n$ is user-defined and can be increased to allow for finer control of the phase profile; in the experiments we used $n = 8$. We used even powers in the polynomial to impart a spatially symmetric PSF in order to reduce the computational burden, as this allows us to simulate the full FOV by only simulating along one axis. This phase, however, is only defined for a single, nominal design wavelength, which is a fixed hyperparameter set to 452 nm in our optimization. While this mask alone is sufficient for modeling monochromatic light propagation, we require the phase at all target wavelengths to design for broadband imaging.

To this end, at each scatterer position in our metasurface, we apply two operations in sequence. The first operation is an inverse, phase-to-structure mapping that computes the scatterer geometry given the desired phase at the nominal design wavelength.
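As a minimal sketch of the even-power phase polynomial described above, the snippet below evaluates the phase at a radial distance and wraps it to the 0 to 2π range. The function names and the example coefficient values are illustrative assumptions and are not taken from the paper; only the functional form and the symbols a_i, r, and R come from the text.

```python
import math


def metasurface_phase(r, coeffs, radius):
    """Evaluate phi(r) = sum_i coeffs[i] * (r / radius)**(2*i).

    coeffs holds a_0 ... a_n (hypothetical values, chosen by the caller).
    r may be signed; only its magnitude matters because all powers are even.
    """
    if abs(r) > radius:
        raise ValueError("r must lie within the phase mask radius")
    u2 = (r / radius) ** 2
    # Horner's rule in the variable u2 = (r / R)^2.
    phase = 0.0
    for a in reversed(coeffs):
        phase = phase * u2 + a
    return phase


def wrap_phase(phase):
    """Wrap an unbounded phase value into the interval [0, 2*pi)."""
    return phase % (2.0 * math.pi)


if __name__ == "__main__":
    # Illustrative coefficients only; a 250 um radius matches a 500 um aperture.
    example_coeffs = [0.5, -3.0, 1.0]
    for r in (0.0, 125e-6, 250e-6):
        phi = metasurface_phase(r, example_coeffs, 250e-6)
        print(r, phi, wrap_phase(phi))
```

Because only even powers of r appear, the profile is radially symmetric, which is the property the text relies on to simulate the full FOV along a single axis.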

With the scatterer geometry determined, we can then apply a forward, structure-to-phase mapping to calculate the phase at the remaining target wavelengths. Leveraging an effective index approximation that ensures a unique geometry for each phase shift in the 0 to 2π range, we ensure differentiability and can directly optimize the phase coefficients by adjusting the scatterer dimensions and computing the response at different target wavelengths. See Supplementary Note 4 for details.

The phase distributions differentiably determined from the nano-scatterers allow us to then calculate the PSF as a function of wavelength and field angle to efficiently model full-color image formation over the whole FOV, see Supplementary Fig. 3. Finally, we simulate sensing and readout with experimentally calibrated Gaussian and Poisson noise by using the reparameterization and score-gradient techniques to enable backpropagation, see Supplementary Note 4 for a code example.

While researchers have designed metasurfaces by treating them as phase masks [5,35], the key difference between our approach and previous ones is that we formulate a proxy function that mimics the phase response of a scatterer under the local phase approximation, enabling us to use automatic differentiation for inverse design.

When compared directly against alternative computational forward simulation methods, such as finite-difference time-domain (FDTD) simulation [33], our technique is approximate but is more than three orders of magnitude faster and more memory-efficient.
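The two-step mapping described above (phase to structure at the design wavelength, then structure to phase at other wavelengths) can be illustrated with a deliberately crude effective-index toy model. Everything except the 452 nm design wavelength is an assumption made for this sketch: the post height, the material index, the linear mixing rule for the effective index, and the function names are hypothetical, and the paper's actual proxy function may differ.

```python
import math

DESIGN_WAVELENGTH = 452e-9  # nominal design wavelength stated in the text (m)
POST_HEIGHT = 750e-9        # hypothetical scatterer height (m)
MATERIAL_INDEX = 2.0        # hypothetical refractive index of the post material


def effective_index(fill):
    """Assumed linear mix between air (fill = 0) and solid material (fill = 1)."""
    return 1.0 + (MATERIAL_INDEX - 1.0) * fill


def structure_to_phase(fill, wavelength):
    """Forward map: phase delay relative to air for a given fill fraction."""
    return 2.0 * math.pi * POST_HEIGHT * (effective_index(fill) - 1.0) / wavelength


def phase_to_structure(phase):
    """Inverse map at the design wavelength: wrapped phase -> fill fraction.

    The forward map is strictly increasing in fill, so every wrapped phase in
    [0, 2*pi) corresponds to exactly one fill fraction.
    """
    wrapped = phase % (2.0 * math.pi)
    scale = 2.0 * math.pi * POST_HEIGHT * (MATERIAL_INDEX - 1.0)
    return wrapped * DESIGN_WAVELENGTH / scale


if __name__ == "__main__":
    fill = phase_to_structure(1.0)
    for wavelength in (452e-9, 550e-9, 700e-9):
        print(wavelength, structure_to_phase(fill, wavelength))
```

In this toy model the phase at another wavelength is simply the design phase scaled by the wavelength ratio, since material dispersion is ignored. The point of the sketch is only that a monotonic, closed-form mapping is invertible and composed of smooth operations, which is what makes gradient-based optimization of the phase coefficients possible.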

For the same aperture as our design, FDTD simulation would require on the order of 30 terabytes for accurate meshing alone. Our technique instead only scales quadratically with length. This enables our entire end-to-end pipeline to achieve a memory reduction of over 3000×, with metasurface simulation and image reconstruction both fitting within a few gigabytes of GPU memory.

The simulated and experimental phase profiles are shown in the figures, including Fig. 3. Note that the phase changes rapidly enough to induce aliasing effects in the phase function; however, since the full profile is directly modeled in our framework, these effects are all incorporated into the simulation of the structure itself and are accounted for during optimization.

Feature propagation and learned nano-optics. We propose a neural deconvolution method that incorporates learned priors while generalizing to unseen test data. Specifically, we design a neural network architecture that performs deconvolution on a learned feature space instead of on raw image intensity.
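To make the idea of deconvolving in a feature space rather than on raw intensity concrete, here is a one-dimensional sketch. It rests on several assumptions that are not in the text: a Wiener filter stands in for the model-based deconvolution step, two fixed filters (identity and finite difference) stand in for the learned feature extractor, the decoder is a trivial placeholder, and all function names are hypothetical.

```python
import cmath


def dft(x, inverse=False):
    """Naive O(n^2) discrete Fourier transform (fine for tiny examples)."""
    n = len(x)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        s = sum(x[t] * cmath.exp(sign * 2j * cmath.pi * k * t / n) for t in range(n))
        out.append(s / n if inverse else s)
    return out


def circular_convolve(signal, kernel):
    """Circular convolution, used here to simulate a blurred measurement."""
    n = len(signal)
    return [sum(signal[(i - j) % n] * kernel[j] for j in range(len(kernel)))
            for i in range(n)]


def wiener_deconvolve(channel, psf, noise_to_signal):
    """Wiener filter in the Fourier domain: X = conj(H) * Y / (|H|^2 + NSR)."""
    n = len(channel)
    padded_psf = list(psf) + [0.0] * (n - len(psf))
    H = dft(padded_psf)
    Y = dft(channel)
    X = [h.conjugate() * y / (abs(h) ** 2 + noise_to_signal) for h, y in zip(H, Y)]
    return [value.real for value in dft(X, inverse=True)]


def extract_features(measurement):
    """Stand-in for a learned feature extractor: identity and finite difference."""
    n = len(measurement)
    identity = list(measurement)
    gradient = [measurement[i] - measurement[i - 1] for i in range(n)]
    return [identity, gradient]


def decode(features):
    """Placeholder decoder: returns the deconvolved identity channel."""
    return features[0]


def reconstruct(measurement, psf, noise_to_signal=1e-6):
    """Extract features, deconvolve each channel, then decode."""
    features = extract_features(measurement)
    propagated = [wiener_deconvolve(f, psf, noise_to_signal) for f in features]
    return decode(propagated)


if __name__ == "__main__":
    scene = [0.0, 0.0, 1.0, 0.0, 0.0, 2.0, 0.0, 0.0]
    psf = [0.6, 0.3, 0.1]
    print(reconstruct(circular_convolve(scene, psf), psf))
```

In a learned system the feature extractor and decoder would be trained networks, so the deconvolution step would operate on features chosen to be robust to noise and PSF mismatch. The fixed filters here only show where such components would plug in.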

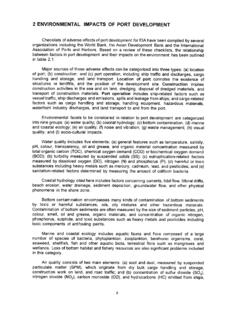

This technique combines both the generalization of model-based deconvolution and the effective feature learning of neural networks, allowing us to tackle image deconvolution for meta-optics with severe aberrations and PSFs with a large spatial extent. This approach generalizes well to experimental captures even when trained only in simulation.

The proposed reconstruction network architecture comprises three stages: a multi-scale feature extractor $f_{FE}$, a propagation stage $f_{Z \to W}$ that deconvolves these features (i.e., propagates features Z to their deconvolved spatial positions W), and a decoder stage $f_{DE}$ that combines the propagated features into a

Fig. 1 Neural nano-optics end-to-end design. Our learned, ultrathin meta-optic as shown in (a) is 500 μm in thickness and diameter, allowing for the design of a miniature camera. The manufactured optic is shown in (b). A zoom-in is shown in (c) and nanopost dimensions are shown in (d). Our end-to-end imaging pipeline shown in (e) is composed of the proposed efficient metasurface image formation model and the feature-based deconvolution algorithm. From the optimizable phase profile, our differentiable model produces spatially varying PSFs, which are then patch-wise convolved with the input image to form the sensor measurement.