Transcription of Transpose & Dot Product - Stanford University

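Transpose & Dot Product

Def: The transpose of an $m \times n$ matrix $A$ is the $n \times m$ matrix $A^T$ whose columns are the rows of $A$. So: the columns of $A^T$ are the rows of $A$, and the rows of $A^T$ are the columns of $A$.

Example: If $A = \begin{bmatrix} 1 & 2 & 3 \\ 4 & 5 & 6 \end{bmatrix}$, then $A^T = \begin{bmatrix} 1 & 4 \\ 2 & 5 \\ 3 & 6 \end{bmatrix}$.

Convention: From now on, vectors $v \in \mathbb{R}^n$ will be regarded as columns (i.e., $n \times 1$ matrices). Therefore, $v^T$ is a row vector (a $1 \times n$ matrix).

Observation: Let $v, w \in \mathbb{R}^n$. Then $v^T w = v \cdot w$. This is because:

$$v^T w = \begin{bmatrix} v_1 & \cdots & v_n \end{bmatrix} \begin{bmatrix} w_1 \\ \vdots \\ w_n \end{bmatrix} = v_1 w_1 + \cdots + v_n w_n = v \cdot w.$$

As far as theory is concerned, the key property of transposes is the following:

Prop: Let $A$ be an $m \times n$ matrix.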

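Then for $x \in \mathbb{R}^n$ and $y \in \mathbb{R}^m$: $(Ax) \cdot y = x \cdot (A^T y)$. Here, $\cdot$ is the dot product of vectors.

Example

Let $A$ be a $5 \times 3$ matrix, so $A: \mathbb{R}^3 \to \mathbb{R}^5$.

- $N(A)$ is a subspace of $\mathbb{R}^3$.
- $C(A)$ is a subspace of $\mathbb{R}^5$.

The transpose $A^T$ is a $3 \times 5$ matrix, so $A^T: \mathbb{R}^5 \to \mathbb{R}^3$.

- $C(A^T)$ is a subspace of $\mathbb{R}^3$.
- $N(A^T)$ is a subspace of $\mathbb{R}^5$.

Observation: Both $C(A^T)$ and $N(A)$ are subspaces of $\mathbb{R}^3$. Might there be a geometric relationship between the two? (No, they're not equal.)

Observation: Both $N(A^T)$ and $C(A)$ are subspaces of $\mathbb{R}^5$. Might there be a geometric relationship between the two? (Again, they're not equal.)

Orthogonal Complements

Def: Let $V \subset \mathbb{R}^n$ be a subspace.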

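The orthogonal complement of $V$ is the set

$$V^\perp = \{x \in \mathbb{R}^n \mid x \cdot v = 0 \text{ for every } v \in V\}.$$

So, $V^\perp$ consists of the vectors which are orthogonal to every vector in $V$.

Fact: If $V \subset \mathbb{R}^n$ is a subspace, then $V^\perp \subset \mathbb{R}^n$ is a subspace.

Examples in $\mathbb{R}^3$:

- The orthogonal complement of $V = \{0\}$ is $V^\perp = \mathbb{R}^3$.
- The orthogonal complement of $V = \{z\text{-axis}\}$ is $V^\perp = \{xy\text{-plane}\}$.
- The orthogonal complement of $V = \{xy\text{-plane}\}$ is $V^\perp = \{z\text{-axis}\}$.
- The orthogonal complement of $V = \mathbb{R}^3$ is $V^\perp = \{0\}$.

Examples in $\mathbb{R}^4$:

- The orthogonal complement of $V = \{0\}$ is $V^\perp = \mathbb{R}^4$.
- The orthogonal complement of $V = \{w\text{-axis}\}$ is $V^\perp = \{xyz\text{-space}\}$.
- The orthogonal complement of $V = \{zw\text{-plane}\}$ is $V^\perp = \{xy\text{-plane}\}$.
- The orthogonal complement of $V = \{xyz\text{-space}\}$ is $V^\perp = \{w\text{-axis}\}$.
- The orthogonal complement of $V = \mathbb{R}^4$ is $V^\perp = \{0\}$.

Prop: Let $V \subset \mathbb{R}^n$ be a subspace.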

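Then:

(a) $\dim(V) + \dim(V^\perp) = n$

(b) $(V^\perp)^\perp = V$

(c) $V \cap V^\perp = \{0\}$

(d) $V + V^\perp = \mathbb{R}^n$

Part (d) means: Every vector $x \in \mathbb{R}^n$ can be written as a sum $x = v + w$ where $v \in V$ and $w \in V^\perp$. Also, it turns out that the expression $x = v + w$ is unique: that is, there is only one way to write $x$ as a sum of a vector in $V$ and a vector in $V^\perp$.

Meaning of $C(A^T)$ and $N(A^T)$

Q: What does $C(A^T)$ mean? Well, the columns of $A^T$ are the rows of $A$. So:

$C(A^T)$ = column space of $A^T$ = span of columns of $A^T$ = span of rows of $A$.

For this reason: We call $C(A^T)$ the row space of $A$.

Q: What does $N(A^T)$ mean? Well:

$$x \in N(A^T) \iff A^T x = 0 \iff (A^T x)^T = 0^T \iff x^T A = 0^T.$$

So, for an $m \times n$ matrix $A$, we see that: $N(A^T) = \{x \in \mathbb{R}^m \mid x^T A = 0^T\}$.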

For this reason: We call $N(A^T)$ the left null space of $A$.

Relations among the Subspaces

Theorem: Let $A$ be an $m \times n$ matrix. Then:

- $C(A^T) = N(A)^\perp$
- $N(A^T) = C(A)^\perp$

Corollary: Let $A$ be an $m \times n$ matrix. Then:

- $C(A) = N(A^T)^\perp$
- $N(A) = C(A^T)^\perp$

Prop: Let $A$ be an $m \times n$ matrix. Then $\operatorname{rank}(A) = \operatorname{rank}(A^T)$.

Motivating Questions for Reading

Problem 1: Let $b \in C(A)$. So, the system of equations $Ax = b$ does have solutions, possibly infinitely many.
Q: What is the solution $x$ of $Ax = b$ with $\|x\|$ the smallest?

Problem 2: Let $b \notin C(A)$. So, the system of equations $Ax = b$ does not have any solutions. In other words, $Ax - b \neq 0$.
Q: What is the vector $x$ that minimizes the error $\|Ax - b\|$?

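That is, what is the vector $x$ that comes closest to being a solution to $Ax = b$?

Orthogonal Projection

Def: Let $V \subset \mathbb{R}^n$ be a subspace. Then every vector $x \in \mathbb{R}^n$ can be written uniquely as $x = v + w$, where $v \in V$ and $w \in V^\perp$. The orthogonal projection onto $V$ is the function $\mathrm{Proj}_V: \mathbb{R}^n \to \mathbb{R}^n$ given by: $\mathrm{Proj}_V(x) = v$. (Note that $\mathrm{Proj}_{V^\perp}(x) = w$.)

Prop: Let $V \subset \mathbb{R}^n$ be a subspace. Then:

$$\mathrm{Proj}_V + \mathrm{Proj}_{V^\perp} = I_n.$$

Of course, we already knew this: We have $x = v + w = \mathrm{Proj}_V(x) + \mathrm{Proj}_{V^\perp}(x)$.

Formula: Let $\{v_1, \ldots, v_k\}$ be a basis of $V \subset \mathbb{R}^n$. Let $A$ be the $n \times k$ matrix $A = \begin{bmatrix} v_1 & \cdots & v_k \end{bmatrix}$. Then:

$$\mathrm{Proj}_V = A(A^T A)^{-1} A^T. \qquad (\star)$$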
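The projection formula can be checked on a small case. The following is a minimal sketch, not part of the original notes: it uses only Python's standard library with exact fractions, and the helper names (`transpose`, `matmul`, `inverse`, `projection_matrix`) as well as the basis of $V$ are assumptions chosen purely for illustration.

```python
from fractions import Fraction


def transpose(M):
    """Return the transpose of a matrix stored as a list of rows."""
    return [list(row) for row in zip(*M)]


def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    Yt = transpose(Y)
    return [[sum(a * b for a, b in zip(row, col)) for col in Yt] for row in X]


def inverse(M):
    """Invert a square matrix by Gauss-Jordan elimination on [M | I]."""
    n = len(M)
    aug = [[Fraction(v) for v in row] + [Fraction(int(i == j)) for j in range(n)]
           for i, row in enumerate(M)]
    for col in range(n):
        # Find a row with a nonzero entry in this column and swap it into place.
        pivot = next((r for r in range(col, n) if aug[r][col] != 0), None)
        if pivot is None:
            raise ValueError("matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        # Scale the pivot row so that the pivot entry becomes 1.
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        # Eliminate this column from every other row.
        for r in range(n):
            if r != col and aug[r][col] != 0:
                f = aug[r][col]
                aug[r] = [a - f * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def projection_matrix(basis):
    """Return A (A^T A)^{-1} A^T, where the columns of A are the basis vectors."""
    A = transpose([[Fraction(v) for v in vec] for vec in basis])  # n x k
    At = transpose(A)
    return matmul(matmul(A, inverse(matmul(At, A))), At)


# V = span{(1, 0, 1), (0, 1, 1)} in R^3 (an illustrative choice of basis).
basis = [[1, 0, 1], [0, 1, 1]]
P = projection_matrix(basis)

x = [Fraction(3), Fraction(0), Fraction(0)]
v = [row[0] for row in matmul(P, [[xi] for xi in x])]  # Proj_V(x)
w = [xi - vi for xi, vi in zip(x, v)]                  # x - Proj_V(x)
```

For this choice, `v` works out to $(2, -1, 1) = 2v_1 - v_2$, which lies in $V$, and `w` works out to $(1, 1, -1)$, which is orthogonal to both basis vectors, so $x = v + w$ is the decomposition from the definition. Exact fractions are used here only so that the check has no rounding error; a numerical library would be the usual choice for larger matrices.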

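Geometry Observations: Let $V \subset \mathbb{R}^n$ be a subspace, and $x \in \mathbb{R}^n$ a vector.

(1) The distance from $x$ to $V$ is: $\|\mathrm{Proj}_{V^\perp}(x)\| = \|x - \mathrm{Proj}_V(x)\|$.

(2) The vector in $V$ that is closest to $x$ is: $\mathrm{Proj}_V(x)$.

Derivation of $(\star)$, the formula $\mathrm{Proj}_V = A(A^T A)^{-1} A^T$: Notice $\mathrm{Proj}_V(x)$ is a vector in $V = \operatorname{span}(v_1, \ldots, v_k) = C(A) = \operatorname{Range}(A)$, and therefore $\mathrm{Proj}_V(x) = Ay$ for some vector $y \in \mathbb{R}^k$.

Now notice that $x - \mathrm{Proj}_V(x) = x - Ay$ is a vector in $V^\perp = C(A)^\perp = N(A^T)$, which means that $A^T(x - Ay) = 0$, which means $A^T x = A^T A y$.

Now, it turns out that our matrix $A^T A$ is invertible (proof in L20), so we get $y = (A^T A)^{-1} A^T x$. Thus, $\mathrm{Proj}_V(x) = Ay = A(A^T A)^{-1} A^T x$.

Minimum Magnitude Solution

Prop: Let $b \in C(A)$ (so $Ax = b$ has solutions).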

Then there exists exactly one vector $x_0 \in C(A^T)$ with $Ax_0 = b$. And: Among all solutions of $Ax = b$, the vector $x_0$ has the smallest length.

In other words: There is exactly one vector $x_0$ in the row space of $A$ which solves $Ax = b$, and this vector is the solution of smallest length.

To find $x_0$: Start with any solution $x$ of $Ax = b$. Then $x_0 = \mathrm{Proj}_{C(A^T)}(x)$.

Least Squares Approximation

Idea: Suppose $b \notin C(A)$. So, $Ax = b$ has no solutions, so $Ax - b \neq 0$. We want to find the vector $x^*$ which minimizes the error $\|Ax^* - b\|$. That is, we want the vector $x^*$ for which $Ax^*$ is the closest vector in $C(A)$ to $b$. In other words, we want the vector $x^*$ for which $Ax^* - b$ is orthogonal to $C(A)$.

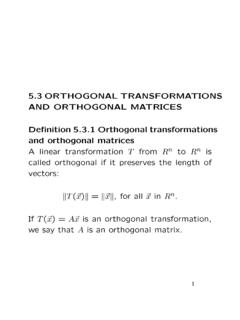

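So, $Ax^* - b \in C(A)^\perp = N(A^T)$, meaning that $A^T(Ax^* - b) = 0$, i.e.:

$$A^T A x^* = A^T b.$$

Quadratic Forms (Intro)

Given an $m \times n$ matrix $A$, we can regard it as a linear transformation $T: \mathbb{R}^n \to \mathbb{R}^m$. In the special case where the matrix $A$ is a symmetric matrix, we can also regard $A$ as defining a quadratic form:

Def: Let $A$ be a symmetric $n \times n$ matrix. The quadratic form associated to $A$ is the function $Q_A: \mathbb{R}^n \to \mathbb{R}$ given by:

$$Q_A(x) = x \cdot Ax = x^T A x = \begin{bmatrix} x_1 & \cdots & x_n \end{bmatrix} A \begin{bmatrix} x_1 \\ \vdots \\ x_n \end{bmatrix},$$

where $\cdot$ is the dot product. Notice that quadratic forms are not linear transformations!

Orthonormal Bases

Def: A basis $\{w_1, \ldots, w_k\}$ for a subspace $V$ is an orthonormal basis if:

(1) The basis vectors are mutually orthogonal: $w_i \cdot w_j = 0$ (for $i \neq j$);

(2) The basis vectors are unit vectors: $w_i \cdot w_i = 1$.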

(i.e.: $\|w_i\| = 1$)

Orthonormal bases are nice for (at least) two reasons:

(a) It is much easier to find the $B$-coordinates $[v]_B$ of a vector when the basis $B$ is orthonormal;

(b) It is much easier to find the projection matrix onto a subspace $V$ when we have an orthonormal basis for $V$.

Prop: Let $\{w_1, \ldots, w_k\}$ be an orthonormal basis for a subspace $V \subset \mathbb{R}^n$.

(a) Every vector $v \in V$ can be written $v = (v \cdot w_1) w_1 + \cdots + (v \cdot w_k) w_k$.

(b) For all $x \in \mathbb{R}^n$: $\mathrm{Proj}_V(x) = (x \cdot w_1) w_1 + \cdots + (x \cdot w_k) w_k$.

(c) Let $A$ be the matrix with columns $\{w_1, \ldots, w_k\}$. Then $A^T A = I_k$, so: $\mathrm{Proj}_V = A(A^T A)^{-1} A^T = AA^T$.

Orthogonal Matrices

Def: An orthogonal matrix is an invertible matrix $C$ such that $C^{-1} = C^T$.

:Let{v1.}