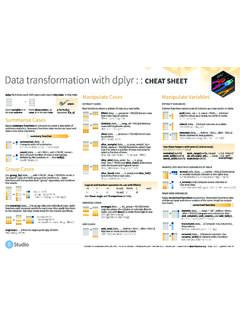

Transcription of Machine Learning Cheat Sheet - GitHub

Machine Learning Cheat Sheet

Classical equations, diagrams and tricks in Machine Learning

May 15, 2017

© 2013 soulmachine

Except where otherwise noted, this document is licensed under a Creative Commons Attribution-ShareAlike (CC BY-SA) license.

Preface

This Cheat Sheet is a condensed version of the Machine Learning manual. It contains many classical equations and diagrams on Machine Learning and aims to help you quickly recall knowledge and ideas in Machine Learning.

This Cheat Sheet has two significant advantages:

1. Clearer symbols. Mathematical formulas use quite a lot of confusing symbols. For example, X can be a set, a random variable, or a matrix. This makes it very difficult for readers to understand the meaning of math formulas. This Cheat Sheet tries to standardize the usage of symbols, and all symbols are clearly pre-defined; see section .
2. Fewer thinking jumps. In many Machine Learning books, authors omit some intermediary steps of a mathematical proof. This may save some space, but it makes the formula hard to follow, and readers get lost partway through the derivation.

This Cheat Sheet tries to keep important intermediary steps wherever possible.

Contents

- Preface
- Notation
- 1 Introduction
  - Types of Machine Learning
  - Three elements of a Machine Learning model
    - Representation
    - Evaluation
    - Optimization
  - Some basic concepts
    - Parametric vs non-parametric models
    - A simple non-parametric classifier: K-nearest neighbors
    - Overfitting
    - Cross validation
    - Model selection
- 2 Probability
  - Frequentists vs. Bayesians
  - A brief review of probability theory
    - Basic concepts
    - Multivariate random variables
    - Bayes rule
    - Independence and conditional independence
    - Quantiles
    - Mean and variance
  - Some common discrete distributions
    - The Bernoulli and binomial distributions
    - The multinoulli and multinomial distributions
    - The Poisson distribution
    - The empirical distribution
  - Some common continuous distributions
    - Gaussian (normal) distribution
    - Student's t-distribution
    - The Laplace distribution
    - The gamma distribution
    - The beta distribution
    - Pareto distribution
  - Joint probability distributions
    - Covariance and correlation
    - Multivariate Gaussian distribution
    - Multivariate Student's t-distribution
    - Dirichlet distribution
  - Transformations of random variables
    - Linear transformations
    - General transformations
    - Central limit theorem
  - Monte Carlo approximation
  - Information theory
    - Entropy
    - KL divergence
    - Mutual information
- 3 Generative models for discrete data
  - Generative classifier
  - Bayesian concept learning
    - Likelihood
    - Prior
    - Posterior
    - Posterior predictive distribution
  - The beta-binomial model
    - Likelihood
    - Prior
    - Posterior
    - Posterior predictive distribution
  - The Dirichlet-multinomial model
    - Likelihood
    - Prior
    - Posterior
    - Posterior predictive distribution
  - Naive Bayes classifiers
    - Optimization
    - Using the model for prediction
    - The log-sum-exp trick
    - Feature selection using mutual information
    - Classifying documents using bag of words
- 4 Gaussian models
  - Basics
  - MLE for an MVN
  - Maximum entropy derivation of the Gaussian *
  - Gaussian discriminant analysis
    - Quadratic discriminant analysis (QDA)
    - Linear discriminant analysis (LDA)
    - Two-class LDA
    - MLE for discriminant analysis
    - Strategies for preventing overfitting
    - Regularized LDA *
    - Diagonal LDA
    - Nearest shrunken centroids classifier *
  - Inference in jointly Gaussian distributions
    - Statement of the result
    - Examples
  - Linear Gaussian systems
    - Statement of the result
  - Digression: The Wishart distribution *
  - Inferring the parameters of an MVN
    - Posterior distribution of μ
    - Posterior distribution of Σ *
    - Posterior distribution of μ and Σ *
    - Sensor fusion with unknown precisions *
- 5 Bayesian statistics
  - Introduction
  - Summarizing posterior distributions
    - MAP estimation
    - Credible intervals
    - Inference for a difference in proportions
  - Bayesian model selection
    - Bayesian Occam's razor
    - Computing the marginal likelihood (evidence)
    - Bayes factors
  - Priors
    - Uninformative priors
    - Robust priors
    - Mixtures of conjugate priors
  - Hierarchical Bayes
  - Empirical Bayes
  - Bayesian decision theory
    - Bayes estimators for common loss functions
    - The false positive vs false negative tradeoff
- 6 Frequentist statistics
  - Sampling distribution of an estimator
    - Bootstrap
    - Large sample theory for the MLE *
  - Frequentist decision theory
  - Desirable properties of estimators
  - Empirical risk minimization
    - Regularized risk minimization
    - Structural risk minimization
    - Estimating the risk using cross validation
    - Upper bounding the risk using statistical learning theory *
    - Surrogate loss functions
  - Pathologies of frequentist statistics *
- 7 Linear regression
  - Introduction
  - Representation
  - MLE
    - OLS
    - SGD
  - Ridge regression (MAP)
    - Basic idea
    - Numerically stable computation *
    - Connection with PCA *
    - Regularization effects of big data
  - Bayesian linear regression
- 8 Logistic regression
  - Representation
  - Optimization
    - MLE
    - MAP
  - Multinomial logistic regression
    - Representation
    - MLE
    - MAP
  - Bayesian logistic regression
    - Laplace approximation
    - Derivation of the BIC
    - Gaussian approximation for logistic regression
    - Approximating the posterior predictive
    - Residual analysis (outlier detection) *
  - Online learning and stochastic optimization
    - The perceptron algorithm
  - Generative vs discriminative classifiers
    - Pros and cons of each approach
    - Dealing with missing data
    - Fisher's linear discriminant analysis (FLDA) *
- 9 Generalized linear models and the exponential family
  - The exponential family
    - Definition
    - Examples
    - Log partition function
    - MLE for the exponential family
    - Bayes for the exponential family
    - Maximum entropy derivation of the exponential family *
  - Generalized linear models (GLMs)
    - Basics
  - Probit regression
  - Multi-task learning
- 10 Directed graphical models (Bayes nets)
  - Introduction
    - Chain rule
    - Conditional independence
    - Graphical models
    - Directed graphical model
  - Examples
    - Naive Bayes classifiers
    - Markov and hidden Markov models
  - Inference
  - Learning
    - Learning from complete data
    - Learning with missing and/or latent variables
  - Conditional independence properties of DGMs
    - d-separation and the Bayes Ball algorithm (global Markov properties)
    - Other Markov properties of DGMs
    - Markov blanket and full conditionals
    - Multinoulli learning
  - Influence (decision) diagrams *
- 11 Mixture models and the EM algorithm
  - Latent variable models
  - Mixture models
    - Mixtures of Gaussians
    - Mixtures of multinoullis
    - Using mixture models for clustering
    - Mixtures of experts
  - Parameter estimation for mixture models
    - Unidentifiability
    - Computing a MAP estimate is non-convex
  - The EM algorithm
    - Introduction
    - Basic idea
    - EM for GMMs
    - EM for K-means
    - EM for mixture of experts
    - EM for DGMs with hidden variables
    - EM for the Student distribution *
    - EM for probit regression *
    - Derivation of the Q function
    - Convergence of the EM algorithm *
    - Generalization of the EM algorithm *
    - Online EM
    - Other EM variants *
  - Model selection for latent variable models
    - Model selection for probabilistic models
    - Model selection for non-probabilistic methods
  - Fitting models with missing data
    - EM for the MLE of an MVN with missing data
- 12 Latent linear models
  - Factor analysis
    - FA is a low rank parameterization of an MVN
    - Inference of the latent factors
    - Unidentifiability
    - Mixtures of factor analysers
    - EM for factor analysis models
    - Fitting FA models with missing data
  - Principal components analysis (PCA)
    - Classical PCA
    - Singular value decomposition (SVD)
    - Probabilistic PCA
    - EM algorithm for PCA
  - Choosing the number of latent dimensions
    - Model selection for FA
    - Model selection for PCA
  - PCA for categorical data
  - PCA for paired and multi-view data
    - Supervised PCA (latent factor regression)
    - Discriminative supervised PCA
    - Canonical correlation analysis
  - Independent component analysis (ICA)
    - Maximum likelihood estimation
    - The FastICA algorithm
    - Using EM
    - Other estimation principles *
- 13 Sparse linear models
- 14 Kernels
  - Introduction
  - Kernel functions
    - RBF kernels
    - TF-IDF kernels
    - Mercer (positive definite) kernels
    - Linear kernels
    - Matern kernels
    - String kernels
    - Pyramid match kernels
    - Kernels derived from probabilistic generative models
  - Using kernels inside GLMs
    - Kernel machines
    - L1VMs, RVMs, and other sparse vector machines
  - The kernel trick
    - Kernelized KNN
    - Kernelized K-medoids clustering
    - Kernelized ridge regression
    - Kernel PCA