Transcription of Rectifier Nonlinearities Improve Neural Network Acoustic …

Rectifier Nonlinearities Improve Neural Network Acoustic Models

Andrew L. Y. Y. Science Department, Stanford University, CA 94305 USA

Abstract

Deep neural network acoustic models produce substantial gains in large vocabulary continuous speech recognition. Work with rectified linear (ReL) hidden units demonstrates additional gains in final system performance relative to more commonly used sigmoidal nonlinearities. In this work, we explore the use of deep rectifier networks as acoustic models for the 300 hour Switchboard conversational speech recognition task. Using simple training procedures without pretraining, networks with rectifier nonlinearities produce 2% absolute reductions in word error rates over their sigmoidal counterparts. We analyze hidden layer representations to quantify differences in how ReL units encode inputs as compared to sigmoidal units. Finally, we evaluate a variant of the ReL unit with a gradient more amenable to optimization in an attempt to further improve deep rectifier networks.

1. Introduction

Deep neural networks are quickly becoming a fundamental component of high performance speech recognition systems.

Deep neural network (DNN) acoustic models perform substantially better than the Gaussian mixture models (GMMs) typically used in large vocabulary continuous speech recognition (LVCSR). DNN acoustic models were initially thought to perform well because of unsupervised pretraining (Dahl et al., 2011). However, DNNs with random initialization and sufficient amounts of labeled training data perform equivalently. LVCSR systems with DNN acoustic models have now expanded to use a variety of loss functions during DNN training, and claim state-of-the-art results on many challenging tasks in speech recognition (Hinton et al., 2012; Kingsbury et al., 2012; Vesely et al., 2013).

Proceedings of the 30th International Conference on Machine Learning, Atlanta, Georgia, USA, :W&CP volume 28. Copyright 2013 by the author(s).

DNN acoustic models for speech use several sigmoidal hidden layers along with a variety of initialization, regularization, and optimization strategies.

3 There is in-creasing evidence from non-speech deep learning re-search that sigmoidal Nonlinearities may not be opti-mal for DNNs. Glorot et al. (2011) found that DNNswith rectifier Nonlinearities in place of traditional sig-moids perform much better on image recognition andtext classification tasks. Indeed, the advantage of rec-tifier networks was most obvious in tasks with an abun-dance of supervised training data, which is certainlythe case for DNN Acoustic model training in with rectifier Nonlinearities played an impor-tant role in a top-performing system for the ImageNetlarge scale image classification benchmark (Krizhevskyet al., 2012). Further, the nonlinearity used in purelyunsupervised feature learning Neural networks plays animportant role in final system performance (Coates &Ng, 2011).Recently, DNNs with rectifier Nonlinearities wereshown to perform well as Acoustic models for speechrecognition. Zeiler et al.

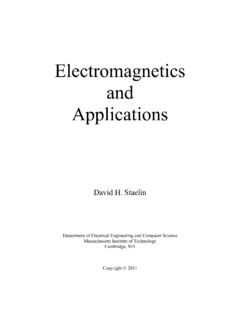

4 (2013) train rectifier networkswith up to 12 hidden layers on a proprietary voicesearch dataset containing hundreds of hours of trainingdata. After supervised training, rectifier DNNs per-form substantially better than their sigmoidal coun-terparts. Dahl et al. (2013) apply DNNs with rec-tifier Nonlinearities and dropout regularization to abroadcast news LVCSR task with 50 hours of train-ing data. Rectifier DNNs with dropout outperformsigmoidal networks without this work, we evaluate rectifier DNNs as acous-tic models for a 300-hour Switchboard conversationalLVCSR task. We focus on simple optimization tech-niques with no pretraining or regularization in orderto directly assess the impact of nonlinearity choice onRectifier Nonlinearities Improve Neural Network Acoustic Models 3 2 10123 1012 TanhReLLReLFigure functions used in Neural networkhidden layers. The hyperbolic tangent (tanh) function isa typical choice while some recent work has shown im-proved performance with rectified linear (ReL) leaky rectified linear function (LReL) has a non-zerogradient over its entire domain, unlike the standard system performance.

We evaluate multiple rectifier variants as there are potential trade-offs in hidden representation quality and ease of optimization when using rectifier nonlinearities. Further, we quantitatively compare the hidden representations of rectifier and sigmoidal networks. This analysis offers insight as to why rectifier nonlinearities perform well. Relative to previous work on rectifier DNNs for speech, this paper offers 1) a first evaluation of rectifier DNNs for a widely available LVCSR task with hundreds of hours of training data, 2) a comparison of rectifier variants, and 3) a quantitative analysis of how different DNNs encode information to further understand why rectifier DNNs perform well. Section 2 discusses motivations for rectifier nonlinearities in DNNs. Section 3 presents a comparison of several DNN acoustic models on the Switchboard LVCSR task along with analysis of hidden layer coding.

2. Rectifier Nonlinearities

Neural networks typically employ a sigmoidal nonlinearity function.

Recently, however, there is increasing evidence that other types of nonlinearities can improve the performance of DNNs. Figure 1 shows a typical sigmoidal activation function, the hyperbolic tangent (tanh). This function serves as the point-wise nonlinearity applied to all hidden units of a DNN. A single hidden unit's activation $h^{(i)}$ is given by,

$$h^{(i)} = \sigma\left(w^{(i)T} x\right), \tag{1}$$

where $\sigma(\cdot)$ is the tanh function, $w^{(i)}$ is the weight vector for the $i$th hidden unit, and $x$ is the input. The input is speech features in the first hidden layer, and hidden activations from the previous layer in deeper layers of the DNN.

The tanh activation function is anti-symmetric about 0 and has a more gradual gradient than a logistic sigmoid. As a result, it often leads to more robust optimization during DNN training. However, sigmoidal DNNs can suffer from the vanishing gradient problem (Bengio et al., 1994). Vanishing gradients occur when lower layers of a DNN have gradients of nearly 0 because higher layer units are nearly saturated at -1 or 1, the asymptotes of the tanh function.
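The saturation effect described above can be illustrated with a minimal sketch in plain Python. It evaluates the tanh unit of Equation (1) and multiplies tanh derivatives along a path through several layers. The function names, the five-layer depth, and the pre-activation values 0.1 and 3.0 are illustrative assumptions, and the weights along the path are ignored so that only the effect of saturation is visible.

```python
import math

def tanh_unit(w, x):
    """Activation of one hidden unit, h = tanh(w . x), as in Equation (1)."""
    z = sum(wi * xi for wi, xi in zip(w, x))
    return math.tanh(z)

def tanh_grad(z):
    """Derivative of tanh at pre-activation z, i.e. 1 - tanh(z)^2."""
    return 1.0 - math.tanh(z) ** 2

def chained_gradient(pre_activations):
    """Product of tanh derivatives along one path, one unit per layer.

    Weights on the path are treated as 1 (an assumption made for clarity),
    so the result isolates how saturation shrinks the gradient.
    """
    g = 1.0
    for z in pre_activations:
        g *= tanh_grad(z)
    return g

# Hypothetical pre-activations: units near 0 versus units near the asymptote.
unsaturated = chained_gradient([0.1] * 5)
saturated = chained_gradient([3.0] * 5)
print(unsaturated, saturated)
```

With these assumed values the gradient through the saturated path is many orders of magnitude smaller than through the unsaturated one, which is the behaviour the text refers to as vanishing gradients.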

Such vanishing gradients cause slow optimization convergence, and in some cases the final trained network converges to a poor local minimum. Hidden unit weights in the network must therefore be carefully initialized so as to prevent significant saturation during the early stages of training. The resulting DNN does not produce a sparse representation in the sense of hard zero sparsity when using tanh hidden units. Many hidden units activate near the -1 asymptote for a large fraction of input patterns, indicating they are off. However, this behavior is potentially less powerful when used with a classifier than a representation where an exact 0 indicates the unit is off.

The rectified linear (ReL) nonlinearity offers an alternative to sigmoidal nonlinearities which addresses the problems mentioned thus far. Figure 1 shows the ReL activation function. The ReL function is mathematically given by,

$$h^{(i)} = \max\left(w^{(i)T} x, 0\right) = \begin{cases} w^{(i)T} x & w^{(i)T} x > 0 \\ 0 & \text{else.} \end{cases} \tag{2}$$
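As a rough illustration of the hard-zero sparsity contrast drawn above, the following sketch computes ReL and tanh activations for the same small layer. The weight vectors and the input are made-up values chosen only for the example, and the helper names are hypothetical.

```python
import math

def pre_activation(w, x):
    """Inner product w . x for one hidden unit."""
    return sum(wi * xi for wi, xi in zip(w, x))

def rel_unit(w, x):
    """Rectified linear unit: max(w . x, 0)."""
    return max(pre_activation(w, x), 0.0)

def tanh_unit(w, x):
    """Sigmoidal (tanh) unit: tanh(w . x)."""
    return math.tanh(pre_activation(w, x))

# Hypothetical layer of three units and one input vector.
weights = [[0.5, -1.0], [-0.3, 0.2], [1.0, 1.0]]
x = [1.0, 2.0]

rel_acts = [rel_unit(w, x) for w in weights]
tanh_acts = [tanh_unit(w, x) for w in weights]

# An inactive ReL unit outputs exactly 0; an inactive tanh unit only
# approaches -1 and never produces an exact zero here.
exact_zeros_rel = sum(1 for a in rel_acts if a == 0.0)
exact_zeros_tanh = sum(1 for a in tanh_acts if a == 0.0)
print(rel_acts, tanh_acts, exact_zeros_rel, exact_zeros_tanh)
```

The first unit has a negative pre-activation, so its ReL output is an exact 0 while its tanh output sits close to the -1 asymptote.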

In Equation (2), when a ReL unit is activated above 0, its partial derivative is 1. Thus vanishing gradients do not exist along paths of active hidden units in an arbitrarily deep network. Additionally, ReL units saturate at exactly 0, which is potentially helpful when using hidden activations as input features for a classifier.

However, ReL units are at a potential disadvantage during optimization because the gradient is 0 whenever the unit is not active. This could lead to cases where a unit never activates, as a gradient-based optimization algorithm will not adjust the weights of a unit that never activates initially. Further, like the vanishing gradients problem, we might expect learning to be slow when training ReL networks with constant 0 gradients.

To alleviate potential problems caused by the hard 0 activation of ReL units, we additionally evaluate leaky rectified linear (LReL) hidden units. The leaky rectifier allows for a small, non-zero gradient when the unit is saturated and not active,

$$h^{(i)} = \begin{cases} w^{(i)T} x & w^{(i)T} x > 0 \\ [\ldots]\, w^{(i)T} x & \text{else.} \end{cases} \tag{3}$$
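The difference in gradient behaviour between the two rectifiers can be sketched as follows. The leak slope `LEAK = 0.01` is an assumed placeholder, since the coefficient is not recoverable from this text. The single-weight unit, the learning rate, and the upstream gradient are likewise hypothetical values chosen to show one update step on a unit that starts inactive.

```python
LEAK = 0.01  # assumed leak slope; not taken from the text

def rel(z):
    """Rectified linear function."""
    return z if z > 0 else 0.0

def rel_grad(z):
    """Gradient of ReL: 1 when active, 0 otherwise."""
    return 1.0 if z > 0 else 0.0

def lrel(z, leak=LEAK):
    """Leaky rectified linear function: small slope when not active."""
    return z if z > 0 else leak * z

def lrel_grad(z, leak=LEAK):
    """Gradient of LReL: 1 when active, the leak slope otherwise."""
    return 1.0 if z > 0 else leak

def sgd_step_on_weight(w, x, upstream, grad_fn, lr=0.1):
    """One gradient step for a unit with a single weight w and input x."""
    z = w * x
    return w - lr * upstream * grad_fn(z) * x

# Hypothetical inactive unit: w * x = -2 < 0, and the loss would fall if
# the activation rose (negative upstream gradient).
w0, x0, upstream = -1.0, 2.0, -1.0
w_rel = sgd_step_on_weight(w0, x0, upstream, rel_grad)
w_lrel = sgd_step_on_weight(w0, x0, upstream, lrel_grad)
print(w_rel, w_lrel)
```

Under these assumptions the ReL weight is left unchanged because its gradient is 0, while the LReL weight moves slightly toward activation.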

Figure 1 shows the LReL function of Equation (3), which is nearly identical to the standard ReL function. The LReL sacrifices hard-zero sparsity for a gradient which is potentially more robust during optimization. We experiment on both types of rectifier, as well as the sigmoidal tanh function.

3. Experiments

We perform LVCSR experiments on the 300 hour Switchboard conversational telephone speech corpus (LDC97S62). The baseline GMM system and forced alignments for DNN training are created using the Kaldi open-source toolkit (Povey et al., 2011). We use a system with 3,034 senones and train DNNs to estimate senone likelihoods in a hybrid HMM speech recognition system. The input features for DNNs are MFCCs with a context window of +/- 3 frames. Per-speaker CMVN is applied as well as fMLLR. The features are dimension reduced with LDA to a final vector of 300 dimensions and globally normalized to have 0 mean and unit variance. Overall, the HMM/GMM system training largely follows an existing Kaldi recipe and we defer to that original work for details (Vesely et al., 2013). For recognition evaluation, we use both the Switchboard and CallHome subsets of the HUB5 2000 data (LDC2002S09).

We are most interested in the effect of nonlinearity choice on DNN performance. For this reason, we use simple initialization and training procedures for DNN optimization. We randomly initialize all hidden layer weights with a mean 0 uniform distribution. The scaling of the uniform interval is set based on layer size to prevent sigmoidal saturation in the initial network (Glorot et al., 2011). The output layer is a standard softmax classifier, and cross entropy with no regularization serves as the loss function. We note that training and development set cross entropies are closely matched throughout training, suggesting that regularization is not necessary for the task. Networks are optimized using stochastic gradient descent (SGD) with momentum and a mini-batch size of 256. The momentum term is initially given a weight of [...], and increases to [...] after 40,000 SGD iterations.
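The initialization and optimizer described above might look roughly like the sketch below. Several details are assumptions rather than the authors' settings: the interval limit `sqrt(6 / (fan_in + fan_out))` is one common way to scale a uniform interval by layer size, the momentum endpoints 0.5 and 0.9 are placeholders because the values are missing from this text, and the linear ramp over 40,000 iterations is one possible reading of "increases to". The toy quadratic objective is purely illustrative.

```python
import math
import random

def init_uniform(fan_in, fan_out, rng):
    """Mean-0 uniform initialization scaled by layer size.

    The limit formula is an assumed choice, not confirmed by the text.
    Returns the weight matrix (fan_out rows) and the limit used.
    """
    limit = math.sqrt(6.0 / (fan_in + fan_out))
    weights = [[rng.uniform(-limit, limit) for _ in range(fan_in)]
               for _ in range(fan_out)]
    return weights, limit

def momentum_at(iteration, start=0.5, end=0.9, ramp_iters=40000):
    """Hypothetical schedule: linear ramp from start to end, then constant."""
    frac = min(iteration / ramp_iters, 1.0)
    return start + (end - start) * frac

def momentum_sgd_step(w, v, grad, lr, mu):
    """Classical momentum update: v <- mu * v - lr * grad, then w <- w + v."""
    v_new = [mu * vi - lr * gi for vi, gi in zip(v, grad)]
    w_new = [wi + vi for wi, vi in zip(w, v_new)]
    return w_new, v_new

rng = random.Random(0)
W, limit = init_uniform(fan_in=4, fan_out=3, rng=rng)

# Toy problem: minimise f(w) = 0.5 * w^2, whose gradient is w itself.
w, v = [1.0], [0.0]
for t in range(200):
    w, v = momentum_sgd_step(w, v, grad=w, lr=0.1, mu=momentum_at(t))
print(w)
```

In a real acoustic model the gradient would come from backpropagating the cross entropy loss over a mini-batch of 256 frames, rather than from the toy objective used here.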