Transcription of 1 Acceptance-Rejection Method - Columbia University

1 Copyrightc 2007 by Karl Sigman1 Acceptance-Rejection MethodAs we already know, finding an explicit formula forF 1(y) for the cdf of a rvXwe wish togenerate,F(x) =P(X x), is not always possible. Moreover, even if it is, there may bealternative methods for generating a rv distributed asFthat is more efficient than the inversetransform Method or other methods we have come across. Here we present a very clever methodknown as theacceptance- rejection start by assuming that theFwe wish to simulate from has a probability density functionf(x); that is, the continuous case. Later we will give a discrete version too, which is very basic idea is to find an alternative probability distributionG, with density functiong(x),from which we already have an efficient algorithm for generating from ( , inverse transformmethod or whatever), but also such that the functiong(x) is close tof(x).

2 In particular, weassume that the ratiof(x)/g(x) is bounded by a constantc>0; supx{f(x)/g(x)} c. (Andin practice we would wantcas close to 1 as possible.)Here then is the algorithm for generatingXdistributed asF: Acceptance-Rejection Algorithm for continuous random variables1. Generate a rvYdistributed GenerateU(independent fromY).3. IfU f(Y)cg(Y),then setX=Y( accept ) ; otherwise go back to 1 ( reject ).Before we prove this and give examples, several things are noteworthy: f(Y) andg(Y) are rvs, hence so is the ratiof(Y)cg(Y)and this ratio is independent ofUinStep (2). The ratio is bounded between 0 and 1; 0<f(Y)cg(Y) 1. The number of timesNthat steps 1 and 2 need to be called ( , the number of iterationsneeded to successfully generateX) is itself a rv and has a geometric distribution with success probabilityp=P(U f(Y)cg(Y);P(N=n) = (1 p)n 1p,n 1.)

3 Thus on averagethe number of iterations required is given byE(N) = 1/p. In the end we obtain ourXas having the conditional distribution of aYgiven that theevent{U f(Y)cg(Y)} direct calculation yields thatp= 1/c, by first conditioning onY:P(U f(Y)cg(Y)|Y=y) =f(y)cg(y); thus, unconditioning and recalling thatYhas densityg(y) yieldsp= f(y)cg(y) g(y)dy1=1c f(y)dy=1c,where the last equality follows sincefis a density function (hence by definition integrates to1). ThusE(N) =c, the bounding constant, and we can now indeed see that it is desirableto choose our alternative densitygso as to minimize this constantc= supx{f(x)/g(x)}. Ofcourse the optimal function would beg(x) =f(x) which is not what we have in mind since thewhole point is to choose a different (easy to simulate) alternative fromf. In short, it is a bitof an art to find an appropriateg.

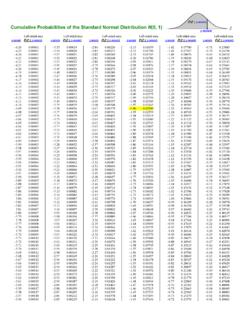

4 In any case, we summarize withThe expected number of iterations of the algorithm required until anXis successfullygenerated is exactly the bounding constantc= supx{f(x)/g(x)}.Proof that the algorithm worksProof :We must show that the conditional distribution ofYgiven thatU f(Y)cg(Y),is indeedF;that is, thatP(Y y|U f(Y)cg(Y)) =F(y). LettingB={U f(Y)cg(Y)},A={Y y}, recallingthatP(B) =p= 1/c, and then using the basic fact thatP(A|B) =P(B|A)P(A)/P(B) yieldsP(U f(Y)cg(Y)|Y y) G(y)1/c=F(y)cG(y) G(y)1/c=F(y),where we used the following computation:P(U f(Y)cg(Y)|Y y) =P(U f(Y)cg(Y),Y y)G(y)= y P(U f(y)cg(y)|Y=w y)G(y)g(w)dw=1G(y) y f(w)cg(w)g(w)dw=1cG(y) y f(w)dw=F(y)cG(y).2 Applications of the Acceptance-Rejection Normal distributionIf we desire anX N( , 2), then we can express it asX= Z+ , whereZdenotes a rvwith theN(0,1) distribution .

5 Thus it suffices to find an algorithm for generatingZ N(0,1).Moreover, if we can generate from the absolute value,|Z|, then by symmetry we can obtain ourZby independently generating a rvS(for sign) that is 1 with probability 1/2 and setting2Z=S|Z|. In other words we generate aUand setZ=|Z|ifU and setZ= |Z|ifU> |Z|is non-negative and has densityf(x) =2 2 e x2/2,x our alternative we will chooseg(x) =e x,x 0, the exponential density with rate1, something we already know how to easily simulate from (inverse transform Method , forexample). Noting thath(x)def=f(x)/g(x) =ex x2/2 2/ , we simply use calculus to compute itsmaximum (solveh (x) = 0); which must occur at that value ofxwhich maximizes the exponentx x2/2; namely at valuex= 1. Thusc= 2e/ , and sof(y)/cg(y) =e (y 1)2 algorithm for generatingZis then1. GenerateYwith an exponential distribution at rate 1; that is, generateUand setY= ln(U).

6 2. GenerateU3. IfU e (Y 1)2/2, set|Z|=Y; otherwise go back to GenerateU. SetZ=|Z|ifU , setZ= |Z|ifU> that in 3,U e (Y 1)2/2if and only if ln(U) (Y 1)2/2 and since ln(U) isexponential at rate 1, we can simplify the algorithm toAlgorithm for generatingZ N(0,1)1. Generate two independent exponentials at rate 1;Y1= ln(U1) andY2= ln(U2).2. IfY2 (Y1 1)2/2, set|Z|=Y1; otherwise go back to GenerateU. SetZ=|Z|ifU , setZ= |Z|ifU> a nice afterthought, note that by the memoryless property of the exponential distribution ,the amount by whichY2exceeds (Y 1)2/2 in Step 2 of the algorithm whenY1is accepted, thatis, the amountYdef=Y2 (Y1 1)2/2, has itself the exponential distribution with rate 1 andis independent ofY1. Thus, for free, we get back an independent exponential which could thenbe used as one of the two needed in Step 1, if we were to want to start generating yet anotherindependentN(0,1) rv.

7 Thus, after repeated use of this algorithm, the expected number ofuniforms required to generate oneZis (2c+ 1) 1 = 2c= might ask if we could improve our algorithm forZby changing the rate of the expo-nential; that is, by using an exponential densityg(x) = e xfor some 6= 1. The answer isno: = 1 minimizes the value ofcobtained (as can be proved using elementary calculus). Beta distributionIn general, a beta distribution on the unit interval,x (0,1), has a density of the formf(x) =bxn(1 x)mwithnandmnon-negative (integers or not). The constantbis thenormalizing constant,b=[ 10xn(1 x)mdx] 1.(Such distributions generalize the uniform distribution and are useful in modeling randomproportions.)Let us consider a special case of this:f(x) =bxn(1 x)n=b(x(1 x))n. Like the uniformdistribution on (0,1), this has mean 1/2, but its mass is more concentrated near 1/2 than near0 or 1; it has a smaller variance than the uniform.

8 If we useg(x) = 1,x (0,1) (the uniformdensity), thenf(x)/g(x) =f(x) and as is easily verified,c= supxf(x) is achieved atx= 1/2;c=b(1/4) we can generate fromf(x) =b(x(1 x))nas follows:1. IfU2 4n(U1(1 U1))n, then setX=U1; otherwise go back to is interesting to note that in this example, and in fact in any beta example usingg(x) = 1,we do not need to know (or compute in advance) the value ofb; it cancels out in the ratiof(x)/cg(x). Of course if we wish to know the value ofc, we would need to know the value ofb. In the case whennandmare integers,bis given byb= (n+m+ 1)!/n!m!, which in ourexample yieldsb=(2n+1n), and soc=(2n+1n)/4n. For large values ofn, this is not so efficient,as should be clear since in that case the two densitiesfandgare not very are other ways to generate the beta distribution . For example one can use thebasic fact (can you prove this?)

9 That ifX1is a gamma rv with shape parametern+ 1, andindependentlyX2is a gamma rv with shape parameterm+ 1 (and both have the same scaleparameter), thenX=X1/(X1+X2) is beta with densityf(x) =bxn(1 x)m. So it suffices tohave an efficient algorithm for generating the gamma distribution . In general, whennandmare integers, the gammas become Erlang (represented by sums of iid exponentials); for example,ifX1andX2are iid exponentials, thenX=X1/(X1+X2) is uniform on (0,1).The gamma distribution can always be simulated using Acceptance-Rejection by using theexponential densityg(x) = e xin which 1/ is chosen as the mean of the gamma (it can beshown that this value of is optimal).3 Discrete caseThe discrete case is analogous to the continuous case. Suppose we want to generate anXthat is a discrete rv with probability mass function (pmf)p(k) =P(X=k).

10 Further supposethat we can already easily generate a discrete rvYwith pmfq(k) =P(Y=k) such thatsupk{p(k)/q(k)} c< . The following algorithm yields ourX: Acceptance-Rejection Algorithm for discrete random variables1. Generate a rvYdistributed asq(k).2. GenerateU(independent fromY).3. IfU p(Y)cq(Y),then setX=Y; otherwise go back to