Transcription of YADA Manual - Computational Details - TeXlips

YADA Manual: Computational Details

Anders Warne

October 26, 2018

Abstract: YADA (Yet Another Dsge Application) is a Matlab program for Bayesian estimation and evaluation of Dynamic Stochastic General Equilibrium and vector autoregressive models. This paper provides the mathematical details for the various functions used by the software. First, some well-known examples of DSGE models are presented, and all of these models are included as examples in the YADA distribution. YADA supports a number of different algorithms for solving log-linearized DSGE models. The fastest algorithm is the so-called Anderson-Moore algorithm (AiM), but the approaches of Klein and Sims are also covered and have the benefit of being numerically more robust in certain situations. The AiM parser is used to translate the DSGE model equations into a structural form that the solution algorithms can make use of. The solution of the DSGE model is expressed as a VAR(1) system that represents the state equations of the state-space representation.
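To make the last sentence concrete, a VAR(1) state equation paired with a linear measurement equation can be written as below. The symbols ($\xi_t$ for the state variables, $F$ and $B_0$ for the solved state-transition and shock-impact matrices, $\eta_t$ for the structural shocks, $y_t$, $x_t$, $A$, $H$, $w_t$ and $R$ for the measurement side) are notation assumed for this sketch, not definitions taken from this section.

```latex
\begin{aligned}
\xi_t &= F\,\xi_{t-1} + B_0\,\eta_t, & \eta_t &\sim N(0, I_q), \\
y_t   &= A' x_t + H' \xi_t + w_t,   & w_t    &\sim N(0, R).
\end{aligned}
```

Iterating the state equation gives $\xi_{t+h} = F^{h+1}\xi_{t-1} + \sum_{k=0}^{h} F^{k} B_0\,\eta_{t+h-k}$, so under this form the response of $\xi_{t+h}$ to $\eta_t$ is $F^{h} B_0$.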

Thereafter, the different prior distributions that are supported, the state-space representation, and the Kalman filter used to evaluate the log-likelihood are presented. Furthermore, the paper discusses how the posterior mode is computed, including how the original model parameters can be transformed internally to facilitate the posterior mode estimation. Next, the paper provides some details on the algorithms used for sampling from the posterior distribution: single-block and multiple fixed or random block random walk Metropolis and slice sampling algorithms, as well as sequential Monte Carlo. In order to conduct inference based on the draws from the posterior sampler, tools for evaluating convergence are considered next. We are here concerned both with simple graphical tools and with formal tools for single and parallel chains. Different methods for estimating the marginal likelihood are considered thereafter. Such estimates may be used to evaluate posterior probabilities for different DSGE models.
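The single-block random walk Metropolis sampler mentioned above can be illustrated with a minimal Python sketch. It is not YADA's Matlab implementation: the function name `rw_metropolis`, the scale factor `c`, and the choice of proposal covariance (for example the inverse Hessian at the posterior mode) are assumptions made for illustration.

```python
import numpy as np

def rw_metropolis(log_post, theta0, sigma, n_draws, c=0.5, seed=0):
    """Single-block random walk Metropolis sampler (illustrative sketch).

    log_post : callable returning the log posterior kernel at theta
    theta0   : starting value, e.g. the posterior mode (assumption)
    sigma    : proposal covariance, e.g. inverse Hessian at the mode (assumption)
    c        : hypothetical scale factor applied to the proposal covariance
    """
    rng = np.random.default_rng(seed)
    theta = np.asarray(theta0, dtype=float)
    # Cholesky factor of c^2 * Sigma, used to draw correlated proposals
    chol = np.linalg.cholesky(c**2 * np.asarray(sigma, dtype=float))
    lp = log_post(theta)
    draws = np.empty((n_draws, theta.size))
    accepted = 0
    for i in range(n_draws):
        # Propose theta* = theta + c * Sigma^{1/2} z with z ~ N(0, I)
        proposal = theta + chol @ rng.standard_normal(theta.size)
        lp_prop = log_post(proposal)
        # Accept with probability min(1, p(theta*|Y) / p(theta|Y))
        if np.log(rng.uniform()) < lp_prop - lp:
            theta, lp = proposal, lp_prop
            accepted += 1
        draws[i] = theta
    return draws, accepted / n_draws
```

For example, with a hypothetical standard normal target, `rw_metropolis(lambda th: -0.5 * float(th @ th), np.zeros(2), np.eye(2), 20000, c=1.0)` returns draws whose sample mean is close to zero along with the acceptance rate; in practice `c` is tuned so that the acceptance rate is moderate.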

Various tools for evaluating an estimated DSGE model are provided, including impulse response functions, forecast error variance decompositions, and historical forecast error and observed variable decompositions. Forecasting issues, such as the unconditional and conditional predictive distributions, are examined in the following section. The paper thereafter considers frequency domain analysis, such as a decomposition of the population spectrum into shares explained by the underlying structural shocks. Estimation of a VAR model with a prior on the steady-state parameters is also discussed. The main concerns are prior hyperparameters, posterior mode estimation, posterior sampling via the Gibbs sampler, and marginal likelihood calculation (when the full prior is proper), before the topic of forecasting with Bayesian VARs is considered. Next, the paper turns to the important topic of misspecification and goodness-of-fit analysis, where the DSGE-VAR framework is considered in some detail.
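As a rough illustration of the first two evaluation tools, the sketch below computes impulse responses and forecast error variance shares for the observed variables. It assumes a state equation $\xi_t = F\xi_{t-1} + B_0\eta_t$ with $\eta_t \sim N(0, I)$ and a measurement equation $y_t = A'x_t + H'\xi_t + w_t$; this notation and the function names `impulse_responses` and `fevd` are hypothetical, and measurement errors are ignored.

```python
import numpy as np

def impulse_responses(F, B0, H, horizon):
    """Responses of y_{t+h} to a unit eta_t shock for h = 0, ..., horizon-1.

    Assumes xi_t = F xi_{t-1} + B0 eta_t and y_t = A'x_t + H'xi_t + w_t,
    so the response at horizon h is H' F^h B0 (A'x_t and w_t play no role).
    Returns an array of shape (horizon, n_observed, n_shocks).
    """
    F, B0, H = (np.asarray(m, dtype=float) for m in (F, B0, H))
    resp = np.empty((horizon, H.shape[1], B0.shape[1]))
    Fh_B0 = B0.copy()                  # F^0 B0
    for h in range(horizon):
        resp[h] = H.T @ Fh_B0          # H' F^h B0
        Fh_B0 = F @ Fh_B0              # advance to F^{h+1} B0
    return resp

def fevd(resp):
    """Variance shares of the (h+1)-step-ahead forecast error by shock.

    Entry [h, i, j] is the share of variable i's forecast error variance
    due to shock j; a variable with zero total variance would divide by zero.
    """
    cum = np.cumsum(resp**2, axis=0)            # sum over k <= h of squared responses
    total = cum.sum(axis=2, keepdims=True)      # total variance per variable and horizon
    return cum / total
```

Because the shocks are assumed to have identity covariance, each squared response is directly a variance contribution, which is why the shares are simple ratios of cumulative sums.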

Finally, the paper provides information about the various types of input that YADA requires and how these inputs should be prepared.

Remarks: Copyright © 2006-2018 Anders Warne, Forecasting and Policy Modelling Division, European Central Bank. I have received valuable comments and suggestions from past and present members of the NAWM team: Kai Christoffel, Günter Coenen, José Emilio Gumiel, Roland Straub, Michal Andrle (Česká Národní Banka, IMF), Juha Kilponen (Suomen Pankki), Igor Vetlov (Lietuvos Bankas, ECB, Deutsche Bundesbank), and Pascal Jacquinot, as well as our consultant from Sveriges Riksbank, Malin Adolfson. A special thanks goes to Mattias Villani for his patience when trying to answer all my questions on Bayesian analysis. I have also benefitted greatly from a course given by Frank Schorfheide at the ECB in November 2005. Moreover, I am grateful to Juan Carlos Martínez-Ovando (Banco de México) for suggesting the slice sampler, and to Paul McNelis (Fordham University) for sharing his dynare code and helping me out with the Fagan-Lothian-McNelis DSGE example.

And last but not least, I am grateful to Magnus Jonsson, Stefan Laséen, Ingvar Strid and David Vestin at Sveriges Riksbank, to Antti Ripatti at Suomen Pankki, and to Tobias Blattner, Boris Glass, Wildo González, Tai-kuang Ho, Markus Kirchner, Mathias Trabandt, and Peter Welz for helping me track down a number of unpleasant bugs and improve the generality of the YADA code. Finally, thanks to Dave Christian for the Kelvin quote.

Contents

1. Introduction
2. DSGE Models
   - The An and Schorfheide Model
   - A Small Open Economy DSGE Model: The Lubik and Schorfheide Example
   - A Model with Money Demand and Money Supply: Fagan, Lothian and McNelis
   - A Medium-Sized Closed Economy DSGE Model: Smets and Wouters
   - The Sticky Price and Wage Equations
   - The Flexible Price and Wage Equations
   - The Exogenous Variables
   - The Steady-State Equations
   - The Measurement Equations
   - Smets and Wouters Model with Stochastic Detrending

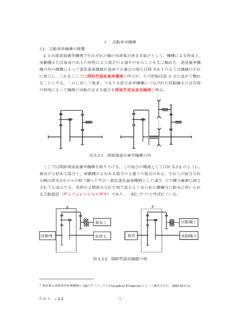

   - A Small-Scale Version of the Smets and Wouters Model
   - The Smets and Wouters Model with Financial Frictions
   - Augmented Measurement Equations
   - The Steady-State in the Smets and Wouters Model with Financial Frictions
   - Preliminaries: The Log-Normal Distribution
   - Steady-State Elasticities
   - The Smets and Wouters Model with Unemployment
   - The Leeper, Plante and Traum Model for Fiscal Policy Analysis
   - The Log-Linearized Dynamic Equations
   - The Steady-State Equations
   - Measurement Equations
3. Solving a DSGE Model
   - The DSGE Model Specification and Solution
   - The Klein Approach
   - The Sims Approach
   - Solving a DSGE Model Subject to a Zero Lower Bound Constraint
   - The Zero Lower Bound
   - Anticipated Shocks
   - Solving the DSGE Model with the Klein Solver
   - Policy Rate Projections and the Complementary Slackness Condition
   - The Forward-Back Shooting Algorithm
   - Stochastic Simulations
   - A Structural Form Representation of the Zero Lower Bound Solution
   - YADA Code: AiMInitialize, AiMSolver, AiMtoStateSpace, KleinSolver, SimsSolver, ZLBKleinSolver, ForwardBackShootingAlgorithm
4. Prior and Posterior Distributions
   - Bayes' Theorem
   - Prior Distributions
   - Monotonic Functions of Continuous Random Variables
   - The Gamma and Beta Functions
   - Gamma, χ², Exponential, Erlang and Weibull Distributions
   - Inverted Gamma and Inverted Wishart Distributions
   - Beta, Snedecor (F), and Dirichlet Distributions
   - Normal and Log-Normal Distributions
   - Left Truncated Normal Distribution
   - Uniform Distribution
   - Student-t and Cauchy Distribution
   - Logistic Distributions
   - Gumbel Distribution
   - Pareto Distribution
   - Discussion
   - Random Number Generators
   - System Priors
   - YADA Code: logGammaPDF, logInverseGammaPDF, logBetaPDF, logNormalPDF, logLTNormalPDF, logUniformPDF, logStudentTAltPDF, logCauchyPDF, logLogisticPDF, logGumbelPDF, logParetoPDF, PhiFunction, GammaRndFcn, InvGammaRndFcn, BetaRndFcn, NormalRndFcn, LTNormalRndFcn, UniformRndFcn, StudentTAltRndFcn, CauchyRndFcn, MultiStudentTRndFcn, LogisticRndFcn, GumbelRndFcn, ParetoRndFcn
5. The Kalman Filter
   - The State-Space Representation
   - The Kalman Filter Recursion
   - Initializing the Kalman Filter
   - The Likelihood Function
   - Smoothed Projections of the State Variables
   - Smoothed and Updated Projections of State Shocks and Measurement Errors
   - Multistep Forecasting
   - Covariance Properties of the Observed and the State Variables
   - Computing Weights on Observations for the State Variables
   - Weights for the Forecasted State Variable Projections
   - Weights for the Updated State Variable Projections
   - Weights for the Smoothed State Variable Projections
   - Simulation Smoothing
   - Chandrasekhar Recursions
   - Square Root Filtering
   - Missing Observations
   - Diffuse Initialization of the Kalman Filter
   - Diffuse Kalman Filtering
   - Diffuse Kalman Smoothing
   - A Univariate Approach to the Multivariate Kalman Filter
   - Univariate Filtering and Smoothing with Standard Initialization
   - Univariate Filtering and Smoothing with Diffuse Initialization
   - Observation Weights for Unobserved Variables under Diffuse Initialization
   - Weights for the Forecasted State Variables under Diffuse Initialization
   - Weights for the Updated State Variable Projections under Diffuse Initialization
   - Weights for the Smoothed State Variable Projections under Diffuse Initialization
   - YADA Code: KalmanFilter(Ht), UnitRootKalmanFilter(Ht), ChandrasekharRecursions, StateSmoother(Ht), SquareRootKalmanFilter(Ht), UnitRootSquareRootKalmanFilter(Ht), SquareRootSmoother(Ht), UnivariateKalmanFilter(Ht), UnitRootUnivariateKalmanFilter(Ht), UnivariateStateSmoother, KalmanFilterMO(Ht), UnitRootKalmanFilterMO(Ht), SquareRootKalmanFilterMO(Ht), UnitRootSquareRootKalmanFilterMO(Ht), UnivariateKalmanFilterMO(Ht), UnitRootUnivariateKalmanFilterMO(Ht), StateSmootherMO(Ht), SquareRootSmootherMO(Ht), UnivariateStateSmootherMO, DiffuseKalmanFilter(MO)(Ht), DiffuseSquareRootKalmanFilter(MO)(Ht), DiffuseUnivariateKalmanFilter(MO)(Ht), DiffuseStateSmoother(MO)(Ht), DiffuseSquareRootSmoother(MO)(Ht), DiffuseUnivariateStateSmoother(MO), DoublingAlgorithmLyapunov
6. Parameter Transformations
   - Transformation Functions for the Original Parameters
   - The Jacobian Matrix
   - YADA Code